This is a quick brain dump of my recent musings on the feasibility of a WebAssembly-based in-browser emulator for x86 and x86-64 processors… partially in the hopes of freeing up my brain for main project work. ;)

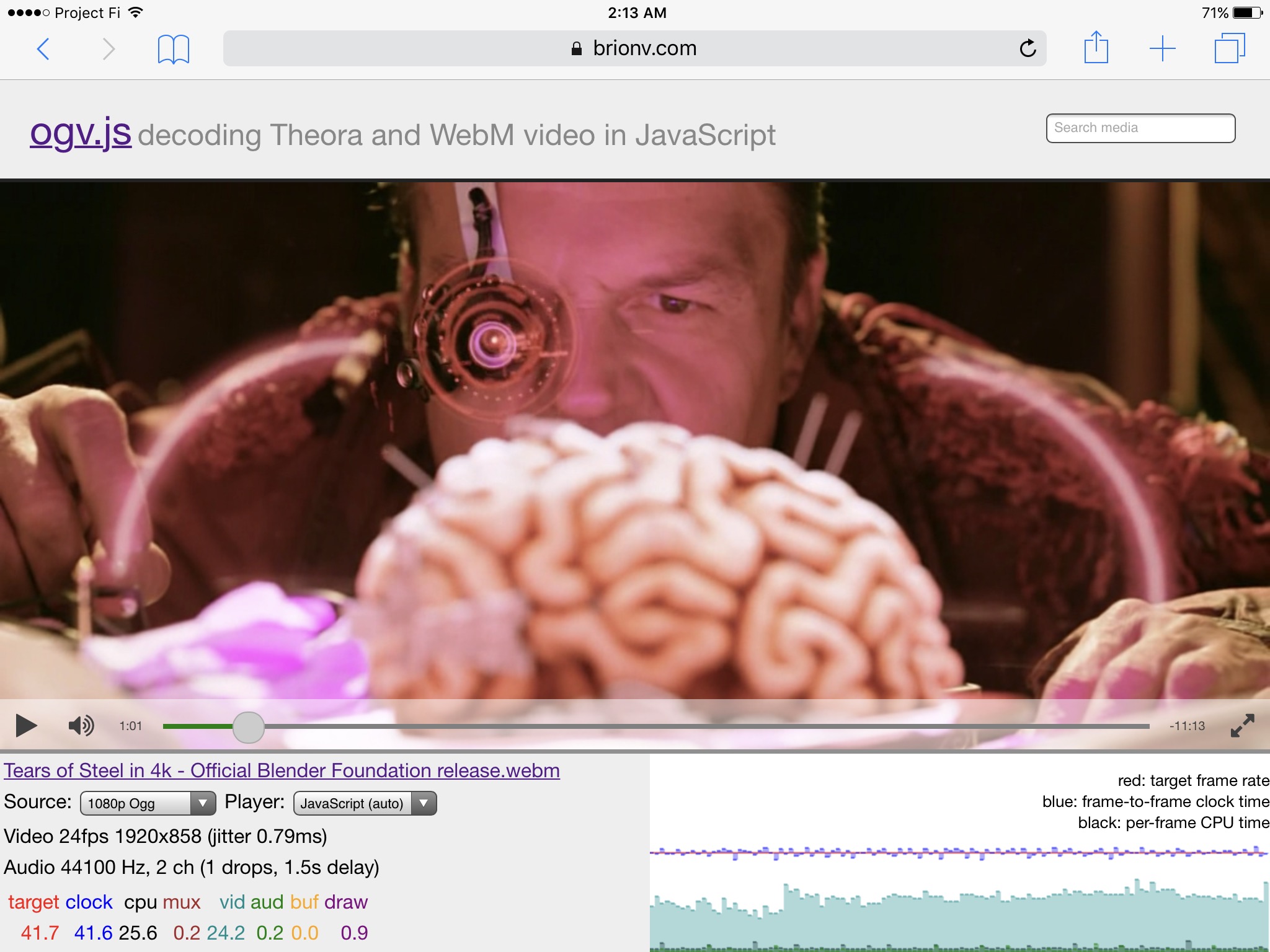

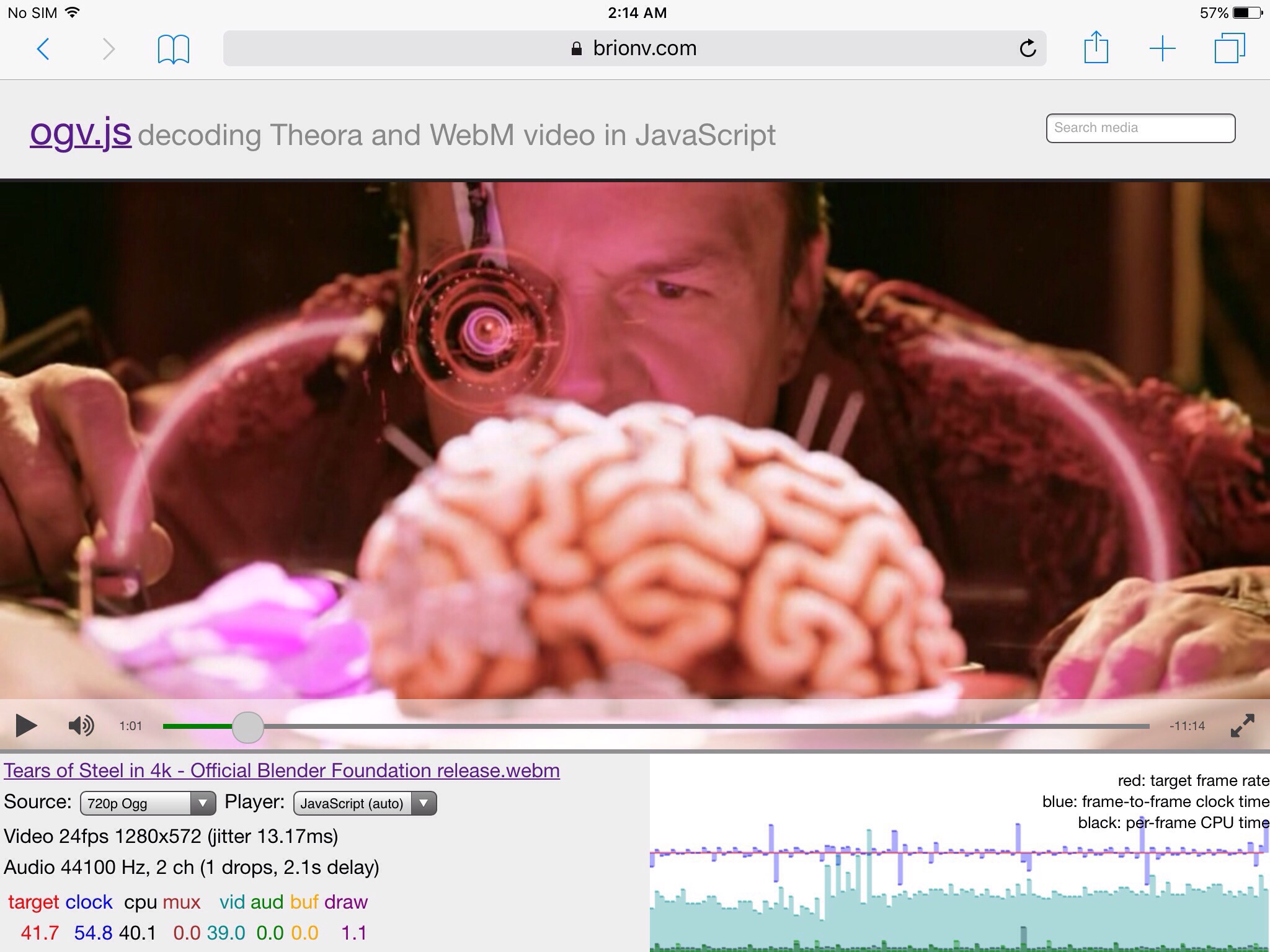

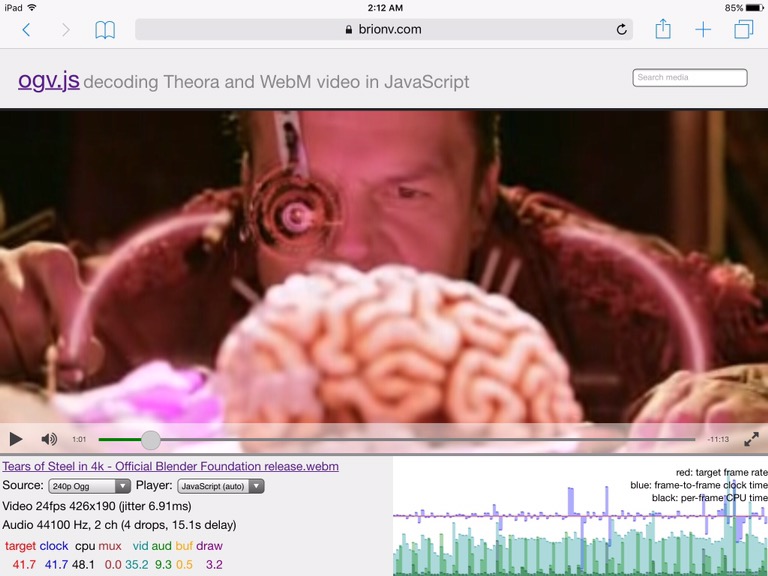

My big side project for some time has been ogv.js, an in-browser video player framework which uses emscripten to cross-compile C libraries for the codecs into JavaScript or, experimentally, the new WebAssembly target. That got me interested in how WebAssembly works at the low level, and how C/C++ programs work, and how we can mishmash them together in ways never intended by gods or humans.

Specifically, I’m thinking it would be fun to make an x86-64 Linux process-level emulator built around a WebAssembly implementation. This would let you load a native Linux executable into a web browser and run it, say, on your iPad. Slowly. :)

System vs process emulation

System emulators provide the functioning of an entire computer system, with emulated software-hardware interfaces: you load up a full kernel-mode operating system image which talks to the emulated hardware. This is what you use for playing old video games, or running an old or experimental operating system. This can require emulating lots of detailed behavior of a system, which might be tricky or slow, and programs may not integrate with a surrounding environment well because they live in a tiny computer within a computer.

Process emulators work at the level of a single user-mode process, which means you only have to emulate up to the system call layer. Older Mac users may remember their shiny new Intel Macs running old PowerPC applications through the “Rosetta” emulator for instance. QEMU on Linux can be set up to handle similar cross-arch emulated execution, for testing or to make some cross-compilation scenarios easier.

A process emulator has some attraction because the model is simpler inside the process… If you don’t have to handle interrupts and task switches, you can run more instructions together in a row; elide some state changes; all kinds of fun things. You might not have to implement indirect page tables for memory access. You might even be able to get away with modeling some function calls as function calls, and loops as loops!

WebAssembly instances and Linux processes

There are many similarities, which is no coincidence as WebAssembly is designed to run C/C++ programs similarly to how they work in Linux/Unix or Windows while being shoehornable into a JavaScript virtual machine. :)

An instantiated WebAssembly module has a “linear memory” (a contiguous block of memory addressable via byte indexing), analogous to the address space of a Linux process. You can read and write int and float values of various sizes anywhere you like, and interpretation of bytewise data is up to you.

Like a native process, the module can request more memory from the environment, which will be placed at the end. (The “grow_memory” operator, since renamed “memory.grow”, is somewhat analogous to the Linux “brk” syscall, or some usages of “mmap”.) Unlike a native process, usable memory always starts at 0 (so you can dereference a NULL pointer!) and there’s no way to have a “sparse” address space by mapping things to arbitrary locations.
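A minimal sketch of what that linear memory looks like from the JavaScript side, using the standard `WebAssembly.Memory` API. The page counts and the values written are arbitrary, picked only for illustration:

```typescript
// One page of WebAssembly linear memory is 64 KiB.
const PAGE_SIZE = 64 * 1024;

// Start with 1 page; allow growth up to 4 pages (arbitrary illustrative limits).
const memory = new WebAssembly.Memory({ initial: 1, maximum: 4 });

// Address 0 is ordinary, writable memory: there is no unmapped NULL page.
let view = new DataView(memory.buffer);
view.setUint32(0, 0xdeadbeef, true); // little-endian, like x86
view.setFloat64(8, 1.5, true);

// Interpretation of the bytes is up to the reader: the same location can be
// read back as a single byte or as a 32-bit integer.
const lowByte = view.getUint8(0);
const word = view.getUint32(0, true);

// Growing appends pages at the end, a bit like brk(). grow() returns the
// previous size in pages, and the old ArrayBuffer is detached, so any views
// must be recreated afterwards.
const previousPages = memory.grow(1);
view = new DataView(memory.buffer);
const sizeAfterGrow = memory.buffer.byteLength;
const wordAfterGrow = view.getUint32(0, true);
```

The detached-buffer behavior is worth keeping in mind for an emulator: any typed-array views held on the JS side (say, by syscall handlers) go stale whenever the emulated process grows its heap.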

The module can also have “global variables” which live outside this address space — but they cannot be dynamically indexed, so you cannot have arrays or any dynamic structures there. In WebAssembly built via emscripten, globals are used only for some special linking structures because they don’t quite map to any C/C++ construct, but hand-written code can use them freely.

The biggest difference from native processes is that WebAssembly code doesn’t live in the linear memory space. Function definitions have their own linear index space (which can’t be dynamically indexed: references are fixed at compile time), plus there’s a “table” of indirect function references (which can be dynamically indexed into). Function pointers in WebAssembly thus aren’t actually pointers to the instructions in linear memory like on native — they’re indexes into the table of dynamic function references.

Likewise, the call stack and local variables live outside linear memory. (Note that C/C++ code built with emscripten will maintain its own parallel stack in linear memory in order to provide arrays, variables that have pointers taken to them, etc.)

WebAssembly’s actual opcodes are oriented as a stack machine, which is meant to be easy to verify and compile into more efficient register-based code at runtime.

Branching and control flow

In WebAssembly control flow is limited: one-way branches are possible only to a containing block (i.e. breaking out of a block, or jumping back to the top of a loop). Subroutine calls are only to defined functions (either directly by compile-time reference, or indirectly via the function table).

Control flow is probably the hardest thing to make really match up from native code — which lets you jump to any instruction in memory from any other — to compiled WebAssembly.

It’s easy enough to handle craaaazy native branching in an interpreter loop. Pseudocode:

    loop {
        instruction = decode_instruction(ip)
        instruction.execute() // update ip and any registers, etc
    }

In that case, a JMP or CALL or whatever just updates the instruction pointer when you execute it, and you continue on your merry way from the new position.
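To make the shape of that loop concrete, here is a small runnable sketch. The instruction set is a made-up toy (it is not x86), and the names `Instruction`, `Cpu`, `step` and `run` are mine, purely for illustration:

```typescript
// A toy instruction set (hypothetical, NOT x86) to show the shape of the loop.
type Instruction =
  | { op: "load"; reg: number; value: number } // reg = value
  | { op: "add"; dst: number; src: number }    // dst += src
  | { op: "dec"; reg: number }                 // reg -= 1
  | { op: "jnz"; reg: number; target: number } // if reg != 0, jump to target
  | { op: "halt" };

interface Cpu {
  ip: number;
  regs: number[];
  halted: boolean;
}

// Decode and execute one instruction, updating ip and registers.
function step(cpu: Cpu, program: Instruction[]): void {
  const ins = program[cpu.ip];
  cpu.ip += 1; // default: fall through to the next instruction
  switch (ins.op) {
    case "load": cpu.regs[ins.reg] = ins.value; break;
    case "add": cpu.regs[ins.dst] += cpu.regs[ins.src]; break;
    case "dec": cpu.regs[ins.reg] -= 1; break;
    case "jnz": if (cpu.regs[ins.reg] !== 0) cpu.ip = ins.target; break;
    case "halt": cpu.halted = true; break;
  }
}

function run(program: Instruction[]): Cpu {
  const cpu: Cpu = { ip: 0, regs: [0, 0, 0, 0], halted: false };
  while (!cpu.halted) step(cpu, program);
  return cpu;
}

// Sum 5 + 4 + 3 + 2 + 1 into r0 using a backwards branch.
const sumProgram: Instruction[] = [
  { op: "load", reg: 0, value: 0 },
  { op: "load", reg: 1, value: 5 },
  { op: "add", dst: 0, src: 1 },
  { op: "dec", reg: 1 },
  { op: "jnz", reg: 1, target: 2 },
  { op: "halt" },
];
const finalState = run(sumProgram);
```

A branch here is nothing special: `jnz` just overwrites `ip`, and the loop carries on from wherever that points.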

But what if we wanted to eke more performance out of it by compiling multiple instructions into a single function? That lets us elide unnecessary state changes (updating instruction pointers, registers, flags, etc when they’re immediately overridden) and may even give opportunity to let the compiler re-optimize things further.

A start is to combine runs of instructions that end in a branch or system call (QEMU calls them “translation blocks”; I’ll call them “translation units”, or TUs, here) into a compiled function, then call those in the loop instead of individual instructions:

    loop {
        tu = cached_or_compiled_tu(ip)
        tu.execute() // update registers, ip, etc as we go
    }

So instead of decoding and executing an instruction at a time, we’re decoding several instructions, compiling a new function that runs them, and then running that. Nice, if we have to run it multiple times! But… possibly not worth as much as we want, since a lot of those instruction runs will be really short, and there’ll be function call overhead on every run. And, it seems like it would kill CPU branch prediction and such, by essentially moving all branches to a single place (the tu.execute()).
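A rough sketch of the cached-TU idea, again over a made-up toy instruction set rather than x86. Here “compiling” just means building a JavaScript closure; a real version would emit WebAssembly. All names (`ToyOp`, `Machine`, `compileBlock` and so on) are hypothetical:

```typescript
// Toy instruction set (hypothetical, not x86).
type ToyOp =
  | { op: "addi"; reg: number; imm: number }   // reg += imm
  | { op: "jnz"; reg: number; target: number } // branch: ends a block
  | { op: "halt" };                            // ends a block

interface Machine { ip: number; regs: number[]; halted: boolean }
type CompiledBlock = (m: Machine) => void;

const blockCache = new Map<number, CompiledBlock>();
let compileCount = 0;

// "Compile" the straight-line run starting at `start`, up to and including
// the first branch or halt, into one closure. ip is written once at the end
// of the block instead of after every instruction.
function compileBlock(program: ToyOp[], start: number): CompiledBlock {
  compileCount += 1;
  const body: Array<{ reg: number; imm: number }> = [];
  let pc = start;
  for (;;) {
    const ins = program[pc];
    if (ins.op !== "addi") break;
    body.push({ reg: ins.reg, imm: ins.imm });
    pc += 1;
  }
  const terminator = program[pc];
  const fallThrough = pc + 1;
  return (m) => {
    for (const { reg, imm } of body) m.regs[reg] += imm;
    if (terminator.op === "halt") {
      m.halted = true;
      m.ip = fallThrough;
    } else if (terminator.op === "jnz") {
      m.ip = m.regs[terminator.reg] !== 0 ? terminator.target : fallThrough;
    }
  };
}

function cachedOrCompiled(program: ToyOp[], ip: number): CompiledBlock {
  let block = blockCache.get(ip);
  if (!block) {
    block = compileBlock(program, ip);
    blockCache.set(ip, block);
  }
  return block;
}

function runBlocks(program: ToyOp[]): Machine {
  const m: Machine = { ip: 0, regs: [0, 0], halted: false };
  while (!m.halted) cachedOrCompiled(program, m.ip)(m);
  return m;
}

// r0 counts up by 2 while r1 counts down from 3 to 0.
const countdown: ToyOp[] = [
  { op: "addi", reg: 1, imm: 3 },
  { op: "jnz", reg: 1, target: 2 }, // block starting at 0 ends here
  { op: "addi", reg: 0, imm: 2 },
  { op: "addi", reg: 1, imm: -1 },
  { op: "jnz", reg: 1, target: 2 }, // block starting at 2 ends here
  { op: "halt" },                   // block starting at 5
];
const blockResult = runBlocks(countdown);
```

The loop body runs three times but is only compiled once. Every branch still goes back through the dispatch loop, though, which is exactly the overhead worried about above.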

QEMU goes further in its dynamic translation emulators, modifying the TUs to branch directly to each other as they’re discovered at runtime. It’s all very funky and scary looking…

But QEMU’s technique of modifying trampolines in the live code won’t work, as we can’t modify running code to insert jump instructions… and even if we could, there are no one-way jumps, and using call instructions risks exploding the call stack on what’s actually a loop (there are no proper tail calls in WebAssembly’s initial version).

Relooper

What can be done, though, is to compile bigger, better, badder functions.

When emscripten is generating JavaScript or WebAssembly from your C/C++ program’s LLVM intermediate language, it tries to reconstruct high-level control structures within each function from a more limited soup of local branches. These then get re-compiled back into branch soup by the JIT compiler, but efficiently. ;)

The binaryen WebAssembly code gen library provides this “relooper” algorithm too: you pass in blocks of instructions, possible branches, and the conditions around them, and it’ll spit out some nicer branch structure if possible, or an ugly one if not.

I’m pretty sure it should be possible to take a detected loop cycle of separate TUs and create a combined TU that’s been “relooped” so that it runs more efficiently.

BBBBuuuuutttttt all this sounds expensive in terms of setup. Might want to hold off on any compilation until a loop cycle is detected, for instance, and just let the interpreter roll on one-off code.
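One cheap way to “hold off until a loop cycle is detected” might be to count taken backward branches per target and only compile once a target gets hot. This is a sketch of that heuristic only; the threshold and the names `HOT_THRESHOLD` and `noteBranch` are made up:

```typescript
const HOT_THRESHOLD = 3; // arbitrary illustrative value
const backEdgeHits = new Map<number, number>();

// Called by the interpreter whenever a branch is taken. Returns true once the
// branch target looks like the head of a hot loop worth compiling.
function noteBranch(fromIp: number, toIp: number): boolean {
  if (toIp > fromIp) return false; // forward branch: not a loop back-edge
  const hits = (backEdgeHits.get(toIp) ?? 0) + 1;
  backEdgeHits.set(toIp, hits);
  return hits >= HOT_THRESHOLD;
}

// A forward jump never triggers compilation...
const forwardHot = noteBranch(0x10, 0x20);
// ...but the third trip around a loop whose head is at 0x10 does.
const loopTrips = [
  noteBranch(0x20, 0x10),
  noteBranch(0x20, 0x10),
  noteBranch(0x20, 0x10),
];
```

One-off code never pays the compile cost this way, at the price of interpreting the first few iterations of every loop.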

Modifying runtime code in WebAssembly

Code is not addressable or modifiable within a live module instance; unlike in native code you can’t just write instructions into memory and jump to the pointer.

In fact, you can’t actually add code to a WebAssembly module. So how are we going to add our functions at runtime? There are two tricks:

First, multiple module instances can use the same linear memory buffer.

Second, the tables for indirect function calls can list “foreign” functions, such as JavaScript functions or WebAssembly functions from a totally unrelated module. And those tables are modifiable at runtime (from the JavaScript side of the border).

These can be used to do full-on dynamic linking of libraries, but all we really need is to be able to add a new function that can be indirect-called, which will run the compiled version of some number of instructions (perhaps even looping natively!) and then return back to the main emulator runtime when it reaches a branch it doesn’t contain.
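A small sketch of the second trick using the standard JS API: compile a brand-new module at runtime and install its function into a table slot. The bytes are a hand-assembled stand-in for whatever a real code generator would produce. To keep it tiny, this module just returns 42 and does not import the shared memory, which a real TU module would need to do:

```typescript
// Hand-assembled bytes for a tiny module equivalent to:
//   (module (func (export "f") (result i32) i32.const 42))
const tinyModuleBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // "\0asm", version 1
  0x01, 0x05, 0x01, 0x60, 0x00, 0x01, 0x7f,       // type section: () -> i32
  0x03, 0x02, 0x01, 0x00,                         // function section: one func of type 0
  0x07, 0x05, 0x01, 0x01, 0x66, 0x00, 0x00,       // export section: "f" = func 0
  0x0a, 0x06, 0x01, 0x04, 0x00, 0x41, 0x2a, 0x0b, // code section: i32.const 42; end
]);

// A table of indirect-call targets, which the main emulator module could import.
const tuTable = new WebAssembly.Table({ initial: 1, element: "anyfunc" });

// "JIT" step: compile a fresh module at runtime and instantiate it.
const jitModule = new WebAssembly.Module(tinyModuleBytes);
const jitInstance = new WebAssembly.Instance(jitModule, {});

// Make room, then install the new function from the JavaScript side.
// grow() returns the old length, which is the index of the new slot.
const slot = tuTable.grow(1);
tuTable.set(slot, jitInstance.exports.f as unknown as () => number);

// Anything sharing the table can now reach the new code via an indirect call.
const installed = tuTable.get(slot) as unknown as () => number;
const answer = installed();
```

From inside the emulator module, the equivalent of `installed()` would be a `call_indirect` through the same table at index `slot`.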

Function calls

Since x86 has a nice handy CALL instruction, and doesn’t just rely on convention, it could be possible to model calls to already-cached TUs as indirect function calls, which may perform better than exiting out to the loop and coming back in. But they’d probably need to be guarded for early exit, for several reasons… if we haven’t compiled the entirety of the relooped code path from start to exit of the function, then we have to exit back out. A guard check on IP and early-return should be able to do that in a fairly sane way.

    function tu_1234() {
        // loop
        do {
            // calc loop condition -> set zero_flag
            ip = 1235
            if !zero_flag {
                break
            }
            ip = 1236
            // CALL 4567
            tu = cached_or_compiled_tu(4567)
            tu.execute()
            if ip != 1236 {
                // only partway through. back to emulator loop,
                // possibly unwinding a long stack :)
                return
            }
            // more code, updating ip as it goes
        }
    }

I think this makes some kind of sense. But if we’re decoding instructions + creating output on the fly, it could take a few iterations through to produce a full compiled set, and exiting a loop early might be … ugly.

It’s possible that all this is a horrible pipe dream, or would perform too badly for JIT compilation anyway.

But it could still be fun for ahead-of-time compilation. ;) Which is complicated… a lot … by the fact that you don’t have the positions of all functions known ahead of time. Plus, if there’s dynamic linking or JIT compilation inside the process, well, none of that’s even present ahead of time.

Prior art: v86

I’ve been looking a lot at v86, a JavaScript-based x86 system emulator. v86 is a straight-up interpreter, with instruction decoding and execution mixed together a bit, but it feels fairly straightforwardly written and easy to follow when I look at things in the code.

v86 uses a set of aliased typed arrays for the system memory, another set for the register file, and then some variables/properties for misc flags and things.
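The aliased-typed-array trick for a register file can be sketched like this. This is my own illustration of the idea, not v86’s actual code, and it assumes a little-endian host (typed arrays use the platform byte order):

```typescript
// Eight 32-bit general-purpose registers backed by one shared buffer.
const regBuffer = new ArrayBuffer(8 * 4);
const reg32 = new Uint32Array(regBuffer); // eax, ecx, edx, ebx, esp, ebp, esi, edi
const reg16 = new Uint16Array(regBuffer); // ax is reg16[0], cx is reg16[2], ...
const reg8 = new Uint8Array(regBuffer);   // al is reg8[0], ah is reg8[1], ...

// Register numbers as they appear in x86 operand selector bits.
const EAX = 0;
const ECX = 1;

// Map an 8-bit register number (al, cl, dl, bl, ah, ch, dh, bh; no REX prefix)
// to a byte offset in the buffer: 0..3 are low bytes, 4..7 are the second bytes.
function reg8Offset(n: number): number {
  return (n & 3) * 4 + (n >> 2);
}

reg32[EAX] = 0x12345678;
const ax = reg16[EAX * 2];      // low 16 bits of eax
const al = reg8[reg8Offset(0)]; // low byte of eax
const ah = reg8[reg8Offset(4)]; // second byte of eax

// Writing through an 8-bit alias only touches that byte of the full register.
reg8[reg8Offset(1)] = 0xff; // mov cl, 0xff
reg8[reg8Offset(5)] = 0x01; // mov ch, 0x01
const ecx = reg32[ECX];
```

No masking or shifting is needed for partial-register reads and writes; the aliasing does it for free.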

Some quick notes:

- a register file in an array means accesses at different sizes are easy (al vs ax vs eax), and you can easily index into it from the operand selector bits from the instruction (as opposed to using a variable per register)

- is there overhead from all the object property accesses etc? would it be more efficient to do everything within a big linear memory?

- as a system emulator there’s some extra overhead to things like protected mode memory accesses (page tables! who knows what!) that could be avoided on a per-process model

- 64-bit emulation would be hard in JavaScript due to lack of 64-bit integers (argh!)

- as an interpreter, instruction decode overhead is repeated during loops!

- to avoid expensive calculations of the flags register bits, most arithmetic operations that would change the flags instead save the inputs for the flag calculations, which get done on demand. This still is often redundant because flags may get immediately rewritten by the next instruction, but is cheaper than actually calculating them.
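The lazy-flags note in the last bullet can be sketched as follows. It only handles 32-bit ADD, and it is my own illustration of the general technique rather than a copy of how v86 does it; names like `lastOp` and `add32` are made up:

```typescript
// Remember the operands and result of the last arithmetic op instead of
// computing every flag eagerly.
interface LastOp { a: number; b: number; result: number }
let lastOp: LastOp = { a: 0, b: 0, result: 0 };

// 32-bit ADD: cheap to execute, just records what a flag query would need.
function add32(a: number, b: number): number {
  const result = (a + b) >>> 0; // wrap to unsigned 32 bits
  lastOp = { a: a >>> 0, b: b >>> 0, result };
  return result;
}

// Flags are derived on demand, only when some later instruction asks.
function zeroFlag(): boolean {
  return lastOp.result === 0;
}
function signFlag(): boolean {
  return lastOp.result >>> 31 === 1;
}
function carryFlag(): boolean {
  // Unsigned overflow of an add: the wrapped result is smaller than an operand.
  return lastOp.result < lastOp.a;
}
function overflowFlag(): boolean {
  // Signed overflow of an add: both operands differ in sign from the result.
  return (((lastOp.a ^ lastOp.result) & (lastOp.b ^ lastOp.result)) >>> 31) === 1;
}

const wrapped = add32(0xffffffff, 1);
const afterWrap = { zf: zeroFlag(), cf: carryFlag(), of: overflowFlag() };

const signedOverflow = add32(0x7fffffff, 1);
const afterSigned = { sf: signFlag(), cf: carryFlag(), of: overflowFlag() };
```

If the next instruction is another ADD, the saved operands are simply overwritten and no flag was ever computed.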

WebAssembly possibilities

First, since WebAssembly supports only one linear memory buffer at a time, the register file and perhaps some other data would need to live there. We’d most likely want a layout with the register file and other data at the beginning of memory, with the rest of memory after a fixed point belonging to the emulated process.

Putting all the emulator’s non-RAM state in the beginning means a process emulator can request more memory on demand via Linux ‘brk’ syscall, which would be implemented via the ‘grow_memory’ operator.
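A sketch of how a ‘brk’ handler might sit on top of memory growth under that layout. The layout constants (`GUEST_BASE`, `INITIAL_BREAK`) and the function name are assumptions for illustration, and shrinking is ignored since WebAssembly memory cannot shrink:

```typescript
const WASM_PAGE = 65536;

// Assumed layout: the first page holds emulator state (register file etc.),
// and guest memory starts right after it.
const GUEST_BASE = WASM_PAGE;
const INITIAL_BREAK = 4096; // guest-relative end of the loaded image (made up)

const emuMemory = new WebAssembly.Memory({ initial: 2 });
let guestBreak = INITIAL_BREAK;

// Linux-style brk: returns the new break on success, or the current break
// if the request cannot be satisfied (which also covers the brk(0) query).
function sysBrk(requested: number): number {
  if (requested < INITIAL_BREAK) return guestBreak;
  const neededBytes = GUEST_BASE + requested;
  const haveBytes = emuMemory.buffer.byteLength;
  if (neededBytes > haveBytes) {
    const extraPages = Math.ceil((neededBytes - haveBytes) / WASM_PAGE);
    try {
      emuMemory.grow(extraPages);
    } catch {
      return guestBreak; // growth refused: leave the break where it was
    }
  }
  guestBreak = requested;
  return guestBreak;
}

const queried = sysBrk(0);
const grown = sysBrk(200000);
const pagesNow = emuMemory.buffer.byteLength / WASM_PAGE;
```

Because the emulator’s own state sits below `GUEST_BASE`, growing at the end never disturbs it.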

64-bit math

WebAssembly supports 64-bit integer memory accesses and arithmetic, unlike JavaScript! The only limitation is that you can’t (yet) export a function that returns or accepts an i64 to or from JavaScript-land. That means if we keep our opcode implementations in WebAssembly functions, they can efficiently handle 64-bit ops.

However WebAssembly’s initial version allows only 32-bit memory addressing. This may not be a huge problem for emulating 64-bit processes that don’t grow that large, though, as long as the executable doesn’t need to be loaded at a specific address (which would mean a sparse address space).

Sparse address spaces could be emulated with indirection into a “real” memory that’s in a sub-4GB space, which would be needed for a system emulator anyway.
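A minimal sketch of that indirection: a page map from guest page numbers to offsets in a small “real” buffer. User-space addresses on x86-64 Linux stay below 2^47, so they fit exactly in a JavaScript number here. The sizes and the names `pageTable`, `mapGuestPage` and `translate` are all made up for illustration:

```typescript
const GUEST_PAGE_SIZE = 4096; // x86 page size

// The small "real" memory that actually backs the guest's sparse pages.
const backing = new Uint8Array(16 * GUEST_PAGE_SIZE);

// Guest page number -> byte offset of that page within `backing`.
const pageTable = new Map<number, number>();
let nextFreeOffset = 0;

// Back the page containing guestAddr with the next free chunk of real memory.
function mapGuestPage(guestAddr: number): void {
  const page = Math.floor(guestAddr / GUEST_PAGE_SIZE);
  if (pageTable.has(page)) return;
  if (nextFreeOffset + GUEST_PAGE_SIZE > backing.length) {
    throw new Error("out of backing memory");
  }
  pageTable.set(page, nextFreeOffset);
  nextFreeOffset += GUEST_PAGE_SIZE;
}

// Turn a guest address into an index into `backing`, or fault if unmapped.
function translate(guestAddr: number): number {
  const base = pageTable.get(Math.floor(guestAddr / GUEST_PAGE_SIZE));
  if (base === undefined) {
    throw new Error("unmapped guest address 0x" + guestAddr.toString(16));
  }
  return base + (guestAddr % GUEST_PAGE_SIZE);
}

// Two pages that are terabytes apart in guest space sit side by side for real.
mapGuestPage(0x400000);
mapGuestPage(0x7ffff7a00000);
backing[translate(0x400010)] = 0xc3;
backing[translate(0x7ffff7a00004)] = 0x90;
```

The cost is a lookup on every memory access, which is the overhead the direct-memory model was hoping to avoid.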

Linux details

Statically linked ELF binaries would be easiest to model. More complex to do dynamic linking, need to pass a bundle of files in and do fix-ups etc.

Questions: are executables normally PIC as well as libraries, or do they want a default load address? (Which would break the direct-memory-access model and require some indirection for sparse address space.)

Answer: traditionally Linux x86_64 executables are not PIE, and want to be loaded at 0x400000 or maybe some other random place. D’oh! (Many distributions have since switched to building PIE by default.) But… in the common case, you could simplify that as a single offset.

A syscall on 32-bit is ‘int $0x80’, or the ‘syscall’ instruction on 64-bit. Syscalls would probably mostly need to be implemented on the JS side, poking at the memory and registers of the emulated process state and then returning.
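A sketch of what a JS-side syscall handler could look like for the x86-64 Linux convention (number in rax, arguments in rdi, rsi, rdx, result back in rax). The `Guest` shape is a simplification I made up: registers are plain numbers, and only `write` to stdout and `exit` are handled:

```typescript
// Register numbers in x86-64 encoding order.
const RAX = 0, RDX = 2, RSI = 6, RDI = 7;

// x86-64 Linux syscall numbers and errno values.
const SYS_WRITE = 1;
const SYS_EXIT = 60;
const EBADF = 9;
const ENOSYS = 38;

// Hypothetical, simplified emulated-process state.
interface Guest {
  regs: number[];  // 16 general-purpose registers, values assumed to fit a number
  mem: Uint8Array; // guest memory
  stdout: string;  // collected output
  exited: boolean;
  exitCode: number;
}

function handleSyscall(g: Guest): void {
  switch (g.regs[RAX]) {
    case SYS_WRITE: {
      const fd = g.regs[RDI];
      const buf = g.regs[RSI];
      const count = g.regs[RDX];
      if (fd !== 1) { g.regs[RAX] = -EBADF; break; }
      // Treat the bytes as ASCII to keep the sketch dependency-free.
      const bytes = g.mem.subarray(buf, buf + count);
      g.stdout += Array.from(bytes, (b) => String.fromCharCode(b)).join("");
      g.regs[RAX] = count; // number of bytes written
      break;
    }
    case SYS_EXIT:
      g.exited = true;
      g.exitCode = g.regs[RDI];
      break;
    default:
      g.regs[RAX] = -ENOSYS; // not implemented
  }
}

// write(1, "hi\n", 3) followed by exit(7).
const guest: Guest = {
  regs: new Array<number>(16).fill(0),
  mem: new Uint8Array(256),
  stdout: "",
  exited: false,
  exitCode: 0,
};
guest.mem.set([0x68, 0x69, 0x0a], 100); // "hi\n" at guest address 100
guest.regs[RAX] = SYS_WRITE;
guest.regs[RDI] = 1;
guest.regs[RSI] = 100;
guest.regs[RDX] = 3;
handleSyscall(guest);
const writeReturn = guest.regs[RAX];

guest.regs[RAX] = SYS_EXIT;
guest.regs[RDI] = 7;
handleSyscall(guest);
```

Both of these can complete synchronously; anything that has to wait on the outside world is where the early-exit machinery would come in.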

To do network i/o would probably need to be able to block and return to the emulator… so like a function call bowing out early due to an uncompiled branch being taken, would potentially need an “early exit” from the middle of a combined TU if it does a syscall that ends up being async. On the other hand, if a syscall can be done sync, might be nice not to pay that penalty.

Could also need async syscalls for multi-process stuff via web workers… anything that must call back to main thread would need to do async.

For 64-bit, JS code would have to… painfully… deal with 32-bit half-words. Awesome. ;)
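For a taste of that pain, here is a sketch of a 64-bit add done on the JS side with each register stored as two 32-bit halves (low word first). The storage layout and the name `add64` are assumptions for illustration:

```typescript
// Each 64-bit register occupies two consecutive 32-bit words: low, then high.
function add64(regs: Uint32Array, dst: number, src: number): void {
  // The sum of two 32-bit values is at most 2^33 - 2, still exact in a double.
  const lowSum = regs[dst * 2] + regs[src * 2];
  const carry = lowSum > 0xffffffff ? 1 : 0;
  const highSum = regs[dst * 2 + 1] + regs[src * 2 + 1] + carry;
  // Storing into a Uint32Array wraps the value modulo 2^32.
  regs[dst * 2] = lowSum;
  regs[dst * 2 + 1] = highSum;
}

const regs64 = new Uint32Array(4); // two 64-bit registers: r0 and r1

// r0 = 0x00000001_ffffffff, r1 = 1; r0 += r1 carries into the high word.
regs64[0] = 0xffffffff;
regs64[1] = 0x00000001;
regs64[2] = 1;
regs64[3] = 0;
add64(regs64, 0, 1);
const carried = [regs64[0], regs64[1]];

// r0 = 0xffffffff_ffffffff; r0 += 1 wraps all the way around to zero.
regs64[0] = 0xffffffff;
regs64[1] = 0xffffffff;
add64(regs64, 0, 1);
const wrappedAround = [regs64[0], regs64[1]];
```

Inside WebAssembly the same thing is a single `i64.add`, which is a decent argument for keeping the opcode implementations on that side of the border.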

Multiprocessing

WebAssembly’s initial version has no facility for multiple threads accessing the same memory, which means no threads. However, this is planned to come in the future…

Processes with separate address spaces could be implemented by putting each process emulator in a Web Worker, and having them communicate via messages sent to the main thread through syscalls. This forces any syscall that might need global state to be async.

Prior art: Browsix

Browsix provides a POSIX-like environment based around web techs, with processes modeled in Web Workers and syscalls done via async messages. (C/C++ programs can be compiled to work in Browsix with a modified emscripten.) Pretty sweet ideas. :)

I know they’re working on WebAssembly processes too, and were looking into synchronous syscalls via SharedArrayBuffer/Atomics, so this might be an interesting area to watch.

Could it be possible to make a Linux binary loader for the Browsix kernel? Maybe!

Would it be possible to make graphical Linux binaries work, with some kind of JS X11 or Wayland server? …mmmmmmaaaaybe? :D

Closing thoughts

This all sounds like tons of fun, but may have no use other than learning a lot about some low-level tech that’s interesting.