A fair number of folks at Wikimania were interested in chatting about video! A few quick updates:

An experimental ‘Schnittserver’ (‘Clip server’) project has been in the works for a while with some funding from ze Germans; currently sitting at http://wikimedia.meltvideo.

The Schnittserver can also do server-side rendering of projects using the ‘melt’ format, such as those created with Kdenlive and Shotcut. This allows uploading your original footage (usually in some sort of MP4/H.264 flavor) and sharing the editing project via WebM proxy clips, without generation loss on the final rendering.

Once rendered, your final WebM output can be published up to Commons.

I would love to see some more support for this project, including adding a better web front-end for managing projects/clips and even editing…

Mozilla has an in-browser media editor thing called Popcorn.js; they’re unfortunately reducing investment in the project, but there’s some talk among people working on it and on our end that Wikimedia might be interested in helping adapt it to work with the Schnittserver or some future replacement for it.

Unfortunately I missed the session with the person working on Popcorn.js; I'll have to catch up on it later!

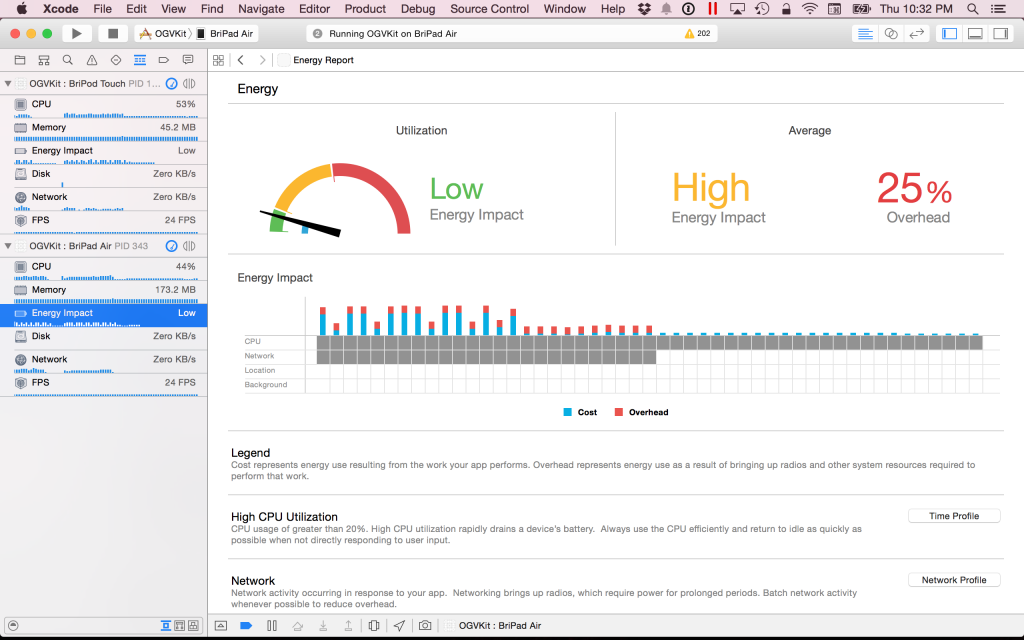

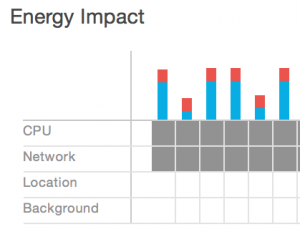

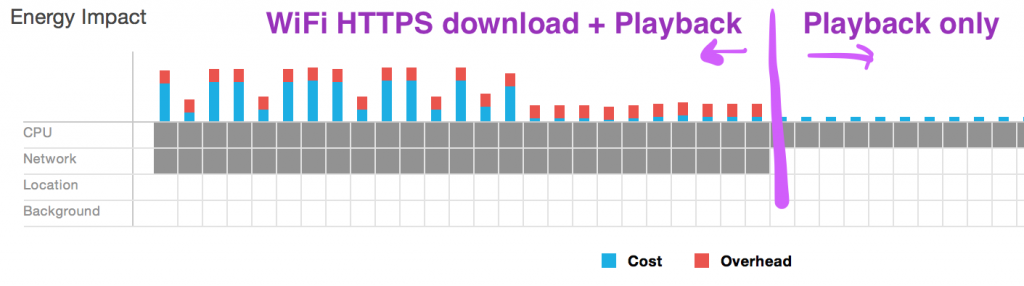

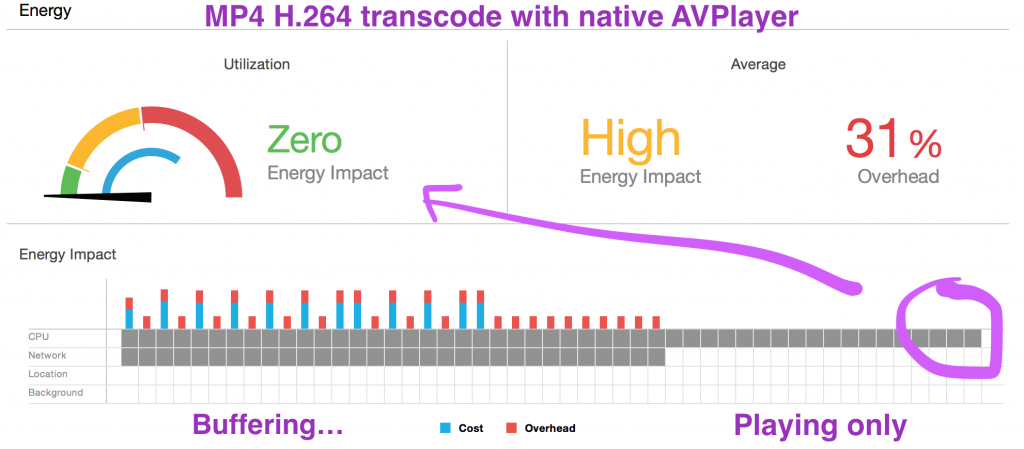

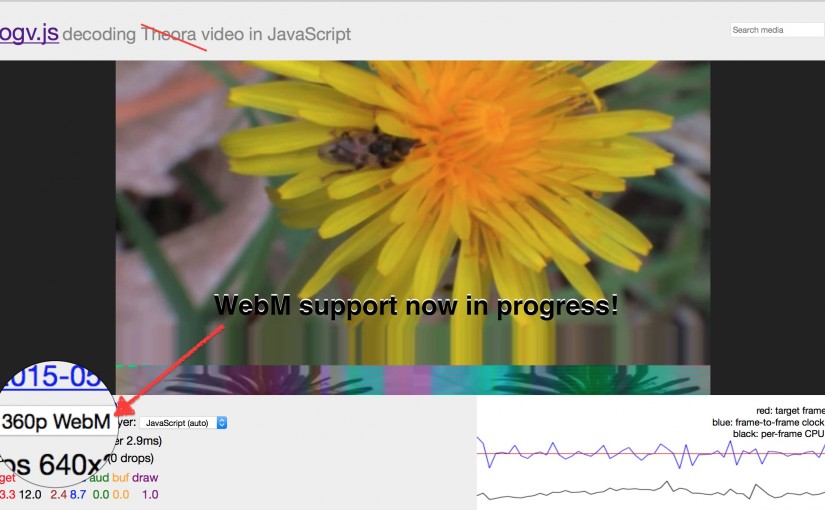

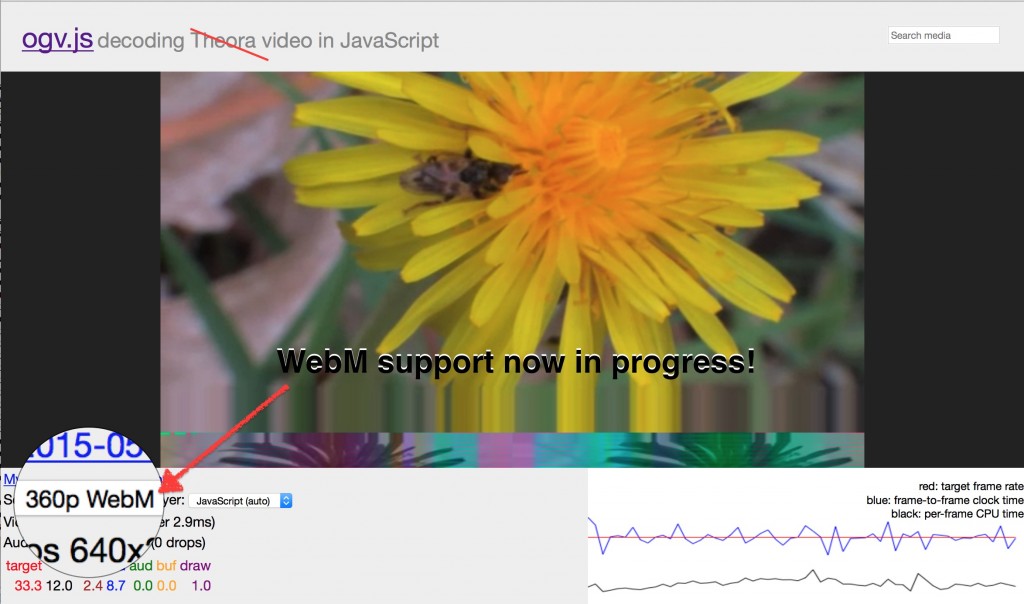

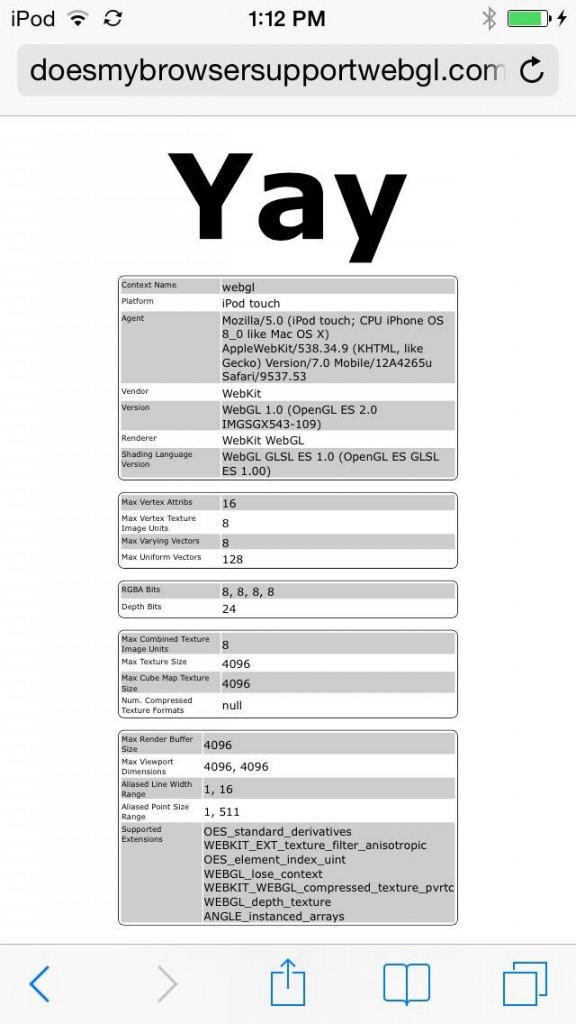

I’m very close to what I consider a 1.0 release of ogv.js, my JavaScript shim to play Ogg (and experimentally WebM) video and audio in Safari and MS IE/Edge without plugins.

I recently fixed some major sound sync bugs on slower devices, and am finishing up the controls which will be used in the mobile view (when not using the full TimedMediaHandler / MwEmbedPlayer interface which we still have on the desktop).

Demo of playback at https://brionv.com/misc/

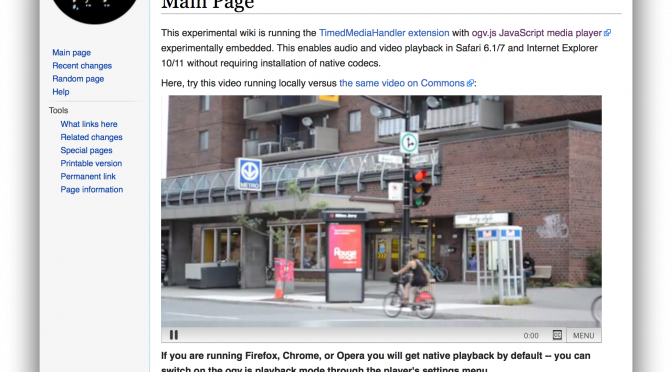

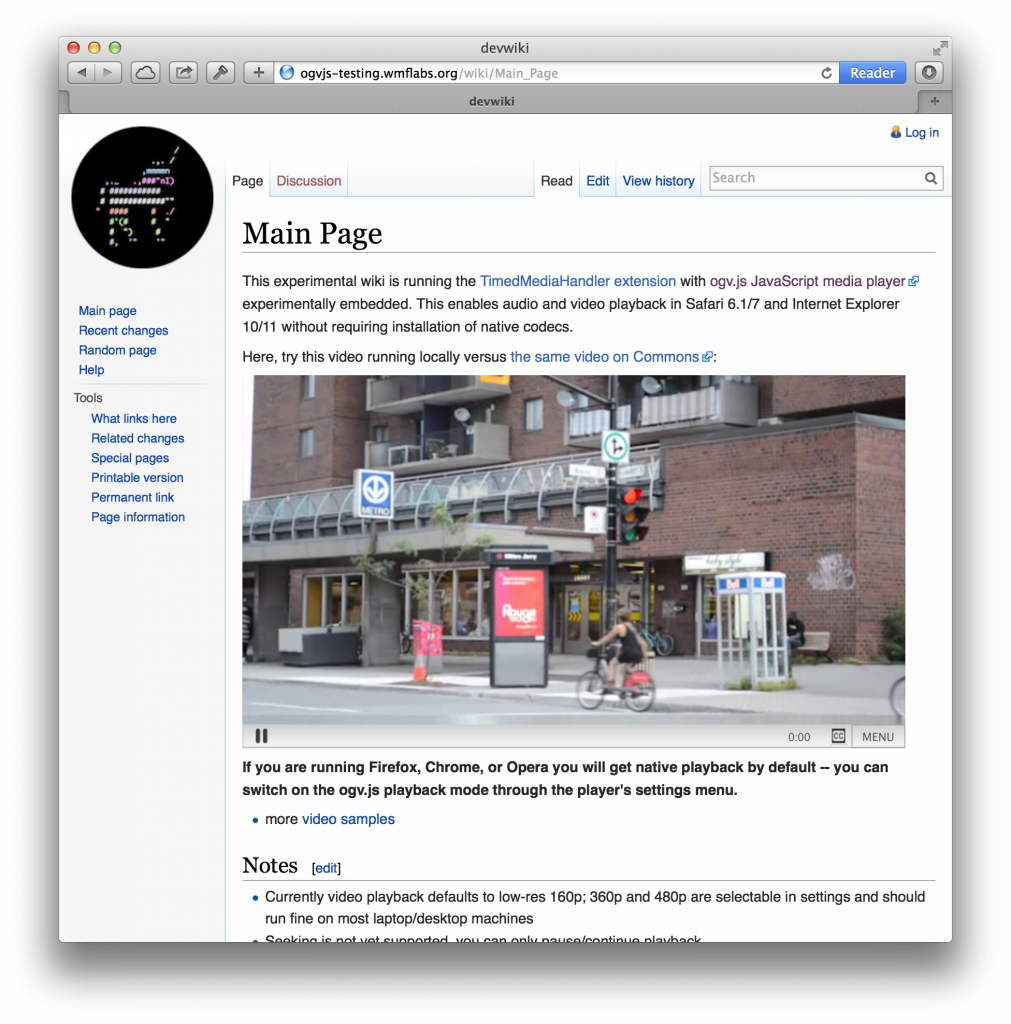

A slightly older version of ogv.js is also running on https://ogvjs-testing.wmflabs.

Infrastructure issues:

I had a talk with Faidon about video requirements on the low-level infrastructure layer; there are some things we need to work on before we really push video:

– seeking/streaming a file with Range subsets causes requests to bypass the Varnish cache layer, potentially causing huge performance problems if there’s a usage spike!

– very large files can’t be sharded cleanly over multiple servers, which creates further performance bottlenecks on popular files

– VERY large files (>4 GB or so) can’t be stored at all, which is a problem for high-quality uploads of things like long Wikimania talks!

For derivative transcodes, we can bypass some of these problems by chunking the output into multiple files of limited length and rigging up ‘gapless playback’, as can be done for HLS or MPEG-DASH-style live streaming. I’m pretty sure I can work out how to do this in the ogv.js player (for Safari and IE) as well as in the native <video> element playback for Chrome and Firefox via Media Source Extensions. Assuming it works with the standard DASH profile for WebM, this is something we can easily make work on Android as well using Google’s ExoPlayer.
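To make the chunking idea a bit more concrete, here's a minimal Python sketch of the bookkeeping a player would need: split a transcode into chunks of limited length, then map a seek position to the chunk that contains it plus the offset inside that chunk. This is only an illustration, not how ogv.js or any existing player does it; the `Segment` type, the function names, and the 10-second chunk length in the usage note are all made up for the example.

```python
import bisect
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Segment:
    index: int        # position of the chunk in playback order
    start_ms: int     # start time within the whole video, in milliseconds
    duration_ms: int  # length of this chunk, in milliseconds


def build_segments(total_ms: int, chunk_ms: int) -> List[Segment]:
    """Split a video of total_ms into chunks of at most chunk_ms each."""
    if total_ms <= 0 or chunk_ms <= 0:
        raise ValueError("durations must be positive")
    count = -(-total_ms // chunk_ms)  # ceiling division on integers
    return [
        Segment(i, i * chunk_ms, min(chunk_ms, total_ms - i * chunk_ms))
        for i in range(count)
    ]


def locate(segments: List[Segment], seek_ms: int) -> Tuple[Segment, int]:
    """Return the chunk containing seek_ms and the offset inside that chunk."""
    if not segments:
        raise ValueError("no segments")
    end_ms = segments[-1].start_ms + segments[-1].duration_ms
    if not 0 <= seek_ms < end_ms:
        raise ValueError("seek position is outside the video")
    starts = [s.start_ms for s in segments]
    # bisect_right finds the first chunk starting after seek_ms;
    # the chunk just before it is the one that contains seek_ms.
    i = bisect.bisect_right(starts, seek_ms) - 1
    return segments[i], seek_ms - segments[i].start_ms
```

For example, a 25-second video cut into 10-second chunks gives three chunks (the last one 5 seconds long), and seeking to 12.5 seconds lands 2.5 seconds into the second chunk. Since each chunk is a whole file, a seek becomes a plain request for one small file rather than a Range request into one huge one.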

DASH playback will also make it easier to use adaptive source switching to handle limited bandwidth or CPU resources.
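Here's a rough Python sketch of what adaptive source switching boils down to: given a set of transcodes at different bitrates and a bandwidth estimate, pick the best one that fits, with some headroom. The `Rendition` type, the `safety_factor` parameter, and the bitrates in the usage note are assumptions for illustration only; a real DASH player would use its own heuristics and would also account for CPU load, which this sketch ignores.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Rendition:
    label: str         # human-readable name, e.g. "480p"
    bitrate_kbps: int  # average bitrate of this transcode


def pick_rendition(
    renditions: List[Rendition],
    measured_kbps: float,
    safety_factor: float = 0.8,
) -> Rendition:
    """Pick the highest-bitrate rendition that fits the bandwidth budget.

    Falls back to the lowest-bitrate rendition when nothing fits.
    """
    if not renditions:
        raise ValueError("no renditions available")
    # Leave some headroom so small bandwidth dips don't stall playback.
    budget = measured_kbps * safety_factor
    ordered = sorted(renditions, key=lambda r: r.bitrate_kbps)
    best = ordered[0]
    for rendition in ordered:
        if rendition.bitrate_kbps <= budget:
            best = rendition
    return best
```

With hypothetical renditions at 512, 1024 and 2048 kbps, a measured 1500 kbps gives a 1200 kbps budget and so selects the 1024 kbps version. With chunked output the player can re-run this choice at every chunk boundary.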

However, we still need to be able to deal with source files which may be quite large…

List and phab projects!

As a reminder there’s a wikivideo-l list: https://lists.wikimedia.

and a Wikimedia-Video project tag in Phabricator: https://

Folks who are interested in pushing further work on video, please feel free to join up. There’s a lot of potential awesomeness!