One of my favorite programming samples is calculating a visualization of the Mandelbrot set, a famous fractal. I’ve added it to my experimental JS-like scripting engine to exercise the floating-point performance.
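For context, the hot loop in a Mandelbrot renderer is the standard escape-time iteration, which is nearly all floating-point multiplies and adds. Here is a minimal C++ sketch of that kernel; the function name `mandelbrotIterations` and its parameters are illustrative, not taken from the demo itself.

```cpp
#include <cassert>

// Escape-time kernel: iterate z = z*z + c starting from z = 0 and count
// the steps until |z| exceeds 2. Returns maxIter if the point never escapes
// within the budget (it is then treated as inside the set).
inline int mandelbrotIterations(double cx, double cy, int maxIter) {
    assert(maxIter >= 0);
    double zx = 0.0, zy = 0.0;
    int i = 0;
    // |z| <= 2 is tested as |z|^2 <= 4 to avoid a square root.
    while (i < maxIter && zx * zx + zy * zy <= 4.0) {
        double nextZx = zx * zx - zy * zy + cx;  // real part of z*z + c
        zy = 2.0 * zx * zy + cy;                 // imaginary part of z*z + c
        zx = nextZx;
        ++i;
    }
    return i;
}
```

Every pixel runs this loop up to `maxIter` times, so each arithmetic temporary that has to be boxed gets multiplied by a very large number.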

The tagged-pointer scheme from my earlier post, which I used to store variant local types, had one big disadvantage: double-precision floating point numbers (64 bits wide) don’t fit in the 31 bits of tagged-pointer space, so they had to be “boxed” (allocated on the heap as an object). That made the Mandelbrot set demo roughly 200x to 300x slower than the original JS running in node. Ouch!

I decided to reimplement NaN boxing (which allows storing a full 64-bit double, a pointer, or an int32, all in a single 64-bit word), this time following the JavaScriptCore method, which makes unboxing pointers easier than the SpiderMonkey method does. Now I can flip my code between NaN-boxing and heap-boxing with a compile-time #define and compare!
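To make the idea concrete, here is a simplified sketch of a NaN-boxed value in the spirit of the JavaScriptCore layout: pointers are stored as-is (top 16 bits zero), doubles are stored with a constant offset added to their raw bits, and int32s carry an all-ones 16-bit tag. The class name `Val`, the constants, and the method names are all hypothetical, and the sketch assumes pointers fit in 48 bits; it is not the engine's actual code.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <cstring>

// Hypothetical NaN-boxed value; names and constants are illustrative.
class Val {
public:
    static Val fromDouble(double d) {
        uint64_t raw;
        if (std::isnan(d)) {
            raw = kCanonicalNaN;  // collapse all NaNs so the offset can't overflow
        } else {
            std::memcpy(&raw, &d, sizeof raw);
        }
        return Val(raw + kDoubleOffset);
    }
    static Val fromInt32(int32_t i) {
        return Val(kIntTag | static_cast<uint32_t>(i));
    }
    static Val fromPointer(void* p) {
        uint64_t raw = reinterpret_cast<uintptr_t>(p);
        assert((raw & kIntTag) == 0);  // assumption: addresses fit in 48 bits
        return Val(raw);
    }

    // Pointers need no unboxing arithmetic: the top 16 bits are simply zero.
    bool isPointer() const { return (bits_ & kIntTag) == 0; }
    bool isInt32() const { return (bits_ & kIntTag) == kIntTag; }
    bool isDouble() const { return !isPointer() && !isInt32(); }

    double asDouble() const {
        assert(isDouble());
        uint64_t raw = bits_ - kDoubleOffset;
        double d;
        std::memcpy(&d, &raw, sizeof d);
        return d;
    }
    int32_t asInt32() const {
        assert(isInt32());
        return static_cast<int32_t>(static_cast<uint32_t>(bits_));
    }
    void* asPointer() const {
        assert(isPointer());
        return reinterpret_cast<void*>(static_cast<uintptr_t>(bits_));
    }

private:
    explicit Val(uint64_t bits) : bits_(bits) {}

    static constexpr uint64_t kDoubleOffset = 1ULL << 48;
    static constexpr uint64_t kIntTag = 0xffff000000000000ULL;
    static constexpr uint64_t kCanonicalNaN = 0x7ff8000000000000ULL;

    uint64_t bits_;
};
```

Because the encoded double range never has its top 16 bits all zero or all one, the three cases can be told apart with a single mask and compare, and no heap allocation is needed to store a double.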

| Build | Time |
| --- | --- |
| original js | 9 ms |
| native w/ NaN box | 48 ms |
| wasm with NaN box | 71 ms |
| native w/ box doubles | 2677 ms |
| wasm with box doubles | 1794 ms |

NaN-boxing *screams* versus heap-boxing. In part it’s the indirection, but I think it’s mostly having to allocate a hojillion objects and then clean them up, as every temporary result in a calculation gets turned into a live object and then garbage-collected after a while. (Note that with heap boxing the native build on my MacBook Pro is slower than the wasm build, probably due to a different allocator and 64-bit vs 32-bit pointers!)

But do we really have to do all that temporary allocation? Most math operators only return a specific type (-, *, and / always return doubles; + can return a string depending on its input). For now the JS code is hand-translated to C++ using operator overloads. If those overloads return plain doubles when possible, and accept them when possible, then temporaries that can only be doubles skip the type-checking and boxing/unboxing overhead.
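Here is a rough sketch of how such overloads might look. The `Val` class below is a hypothetical stand-in for the engine's variant type, and its `toNumber` and `toString` are crude approximations of the JS conversions; the point is only the return types of the operators.

```cpp
#include <cassert>
#include <cstdlib>
#include <string>
#include <type_traits>
#include <utility>

// Hypothetical dynamically typed value: a number or a string.
class Val {
public:
    Val(double d) : isString_(false), num_(d) {}
    Val(std::string s) : isString_(true), num_(0.0), str_(std::move(s)) {}

    bool isString() const { return isString_; }
    // Crude stand-in for JS ToNumber; this is where the type check happens.
    double toNumber() const {
        return isString_ ? std::strtod(str_.c_str(), nullptr) : num_;
    }
    // Crude stand-in for JS ToString (number formatting is not JS-accurate).
    std::string toString() const {
        return isString_ ? str_ : std::to_string(num_);
    }

private:
    bool isString_;
    double num_;
    std::string str_;
};

// -, * and / can only produce numbers, so they return a raw double.
// The double overloads let an unboxed temporary feed straight into the next op.
inline double operator-(const Val& a, const Val& b) { return a.toNumber() - b.toNumber(); }
inline double operator-(const Val& a, double b) { return a.toNumber() - b; }
inline double operator-(double a, const Val& b) { return a - b.toNumber(); }
inline double operator*(const Val& a, const Val& b) { return a.toNumber() * b.toNumber(); }
inline double operator*(const Val& a, double b) { return a.toNumber() * b; }
inline double operator*(double a, const Val& b) { return a * b.toNumber(); }
inline double operator/(const Val& a, const Val& b) { return a.toNumber() / b.toNumber(); }
inline double operator/(const Val& a, double b) { return a.toNumber() / b; }
inline double operator/(double a, const Val& b) { return a / b.toNumber(); }

// + may concatenate strings, so it has to stay fully dynamic and return a Val.
inline Val operator+(const Val& a, const Val& b) {
    if (a.isString() || b.isString()) return Val(a.toString() + b.toString());
    return Val(a.toNumber() + b.toNumber());
}

// The compiler can confirm that Val * Val never yields a boxed value.
static_assert(std::is_same<decltype(std::declval<Val>() * std::declval<Val>()), double>::value,
              "multiplication should return an unboxed double");
```

In an expression like `zx * zx - zy * zy + cx`, both products come back as plain doubles and the subtraction is ordinary native `double - double`; only the final `+` goes through the dynamic path and produces a `Val`.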

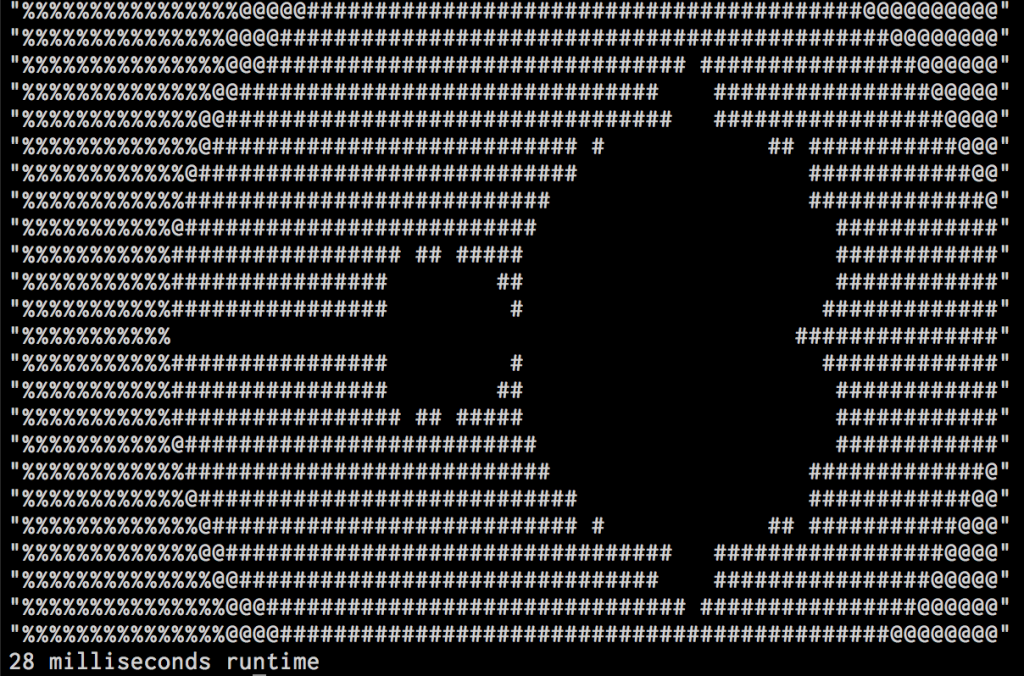

| Build | Time |
| --- | --- |
| original js | 9 ms |
| native w/ NaN box | 17 ms |
| wasm with NaN box | 28 ms |
| native w/ box doubles | 467 ms |
| wasm with box doubles | 248 ms |

Boxing doubles on the heap is still really slow, but a lot less slow thanks to sidestepping it on many intermediate values… And with the 64-bit NaN-boxing, the wasm build running in node is only about 3x slower than the original JS running in node, a big improvement that I’m really happy with!

This makes me feel that 64-bit NaN boxing is the way to go; it’s only slightly more complex than tagging a pointer-sized word and it makes floating point math a ton faster when it’s needed, which sometimes it just is.