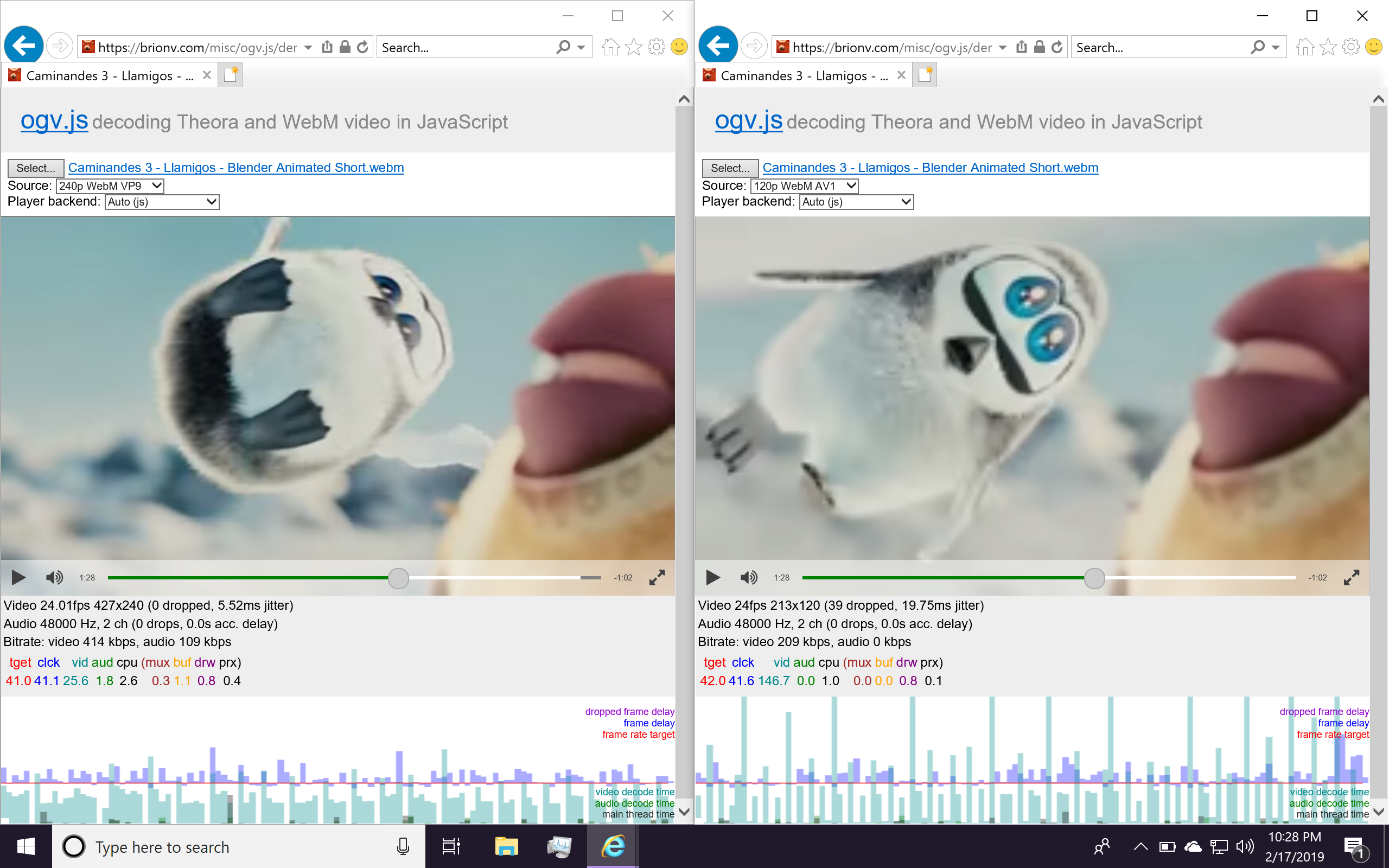

While benchmarking the AV1 and VP9 video decoders in ogv.js, I bemoaned the lack of SIMD vector operations in WebAssembly. Native builds of these decoders lean heavily on SIMD (AVX and SSE for x86, Neon for ARM, etc.) to perform operations on 8 or 16 pixels at once… Turns out there has been movement on the WebAssembly SIMD proposal after all!

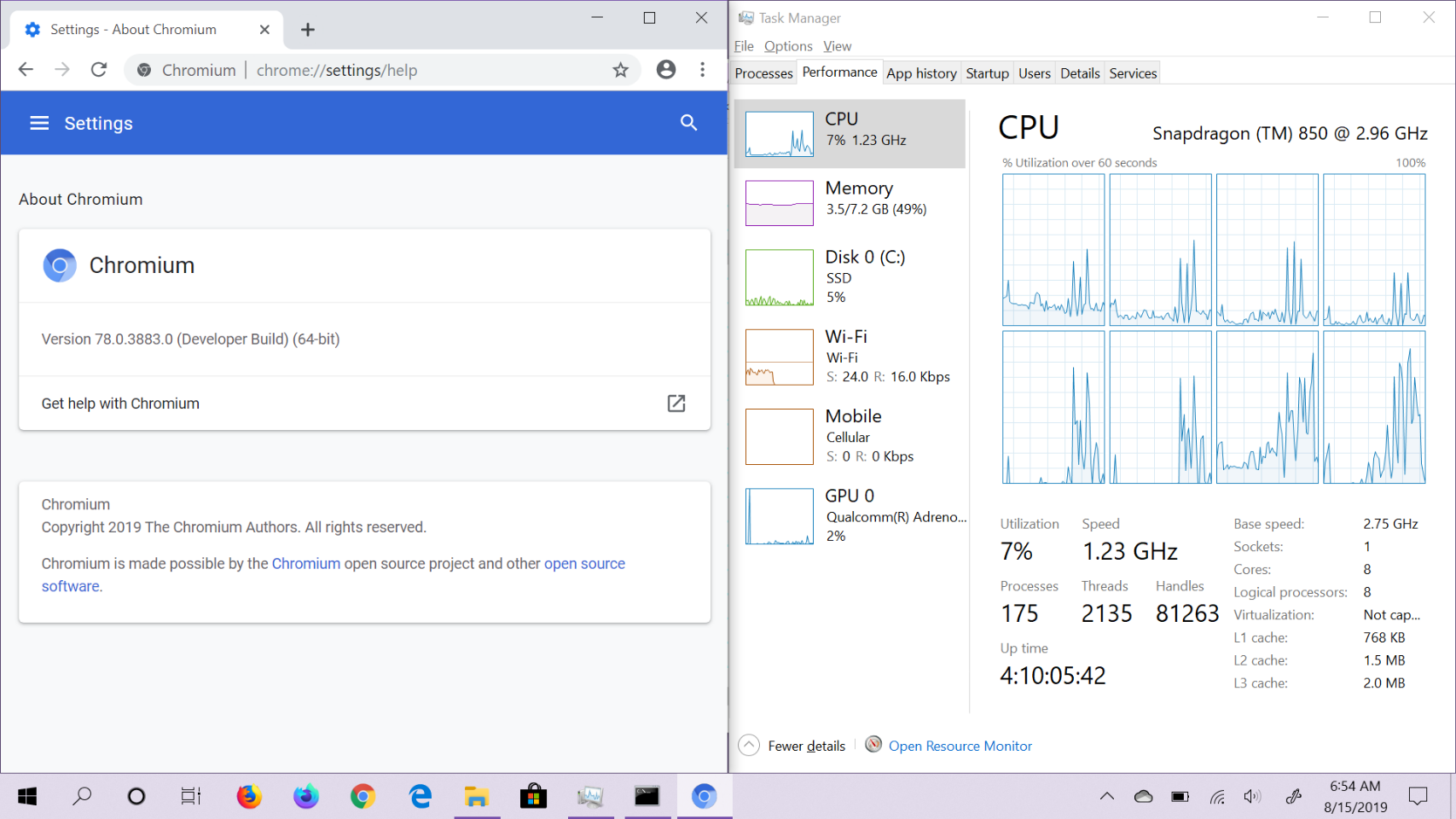

Chrome’s V8 engine has implemented it (warning: somewhat buggy still), and the upstream LLVM Wasm code generator will generate code for it using clang’s vector operations and some intrinsic functions.

emscripten setup

The first step in your SIMD journey is to set up your emscripten development environment for the upstream compiler backend. Install emsdk via git — or update it if you’ve got an old copy.

Be sure to update the tags as well:

./emsdk update-tags

If you’re on Linux you can download a binary installation, but there’s a bug in emsdk that will cause it not to update. (Update: this was fixed a few days ago, so make sure to update your emsdk!)

./emsdk install latest-upstream

./emsdk activate latest-upstream

On Mac or Windows, or to install the latest upstream source on purpose, you can have it build the various tools from source. There’s not a convenient “sdk” catch-all tag for this that I can see, so you may need to call out all the bits:

./emsdk install emscripten-incoming-64bit

./emsdk activate emscripten-incoming-64bit

./emsdk install upstream-clang-master-64bit

./emsdk activate upstream-clang-master-64bit

./emsdk install binaryen-master-64bit

./emsdk activate binaryen-master-64bit

The first build may take a couple of hours or so, depending on your machine.

Re-running the install steps will update the git checkouts and rebuild; this is usually faster than a fresh build but can still take some time.

Upstream backend differences

Be warned that with the upstream backend, emscripten cannot build asm.js, only WebAssembly. If you’re making mixed JS & WebAssembly builds this may complicate your build process, because you have to switch back to the fastcomp backend for the asm.js half.

You can switch back to the current fastcomp backend at any time by swapping your sdk state back:

./emsdk install latest

./emsdk activate latest

Note that every time you switch, the cached libc will get rebuilt on your next emcc invocation.

Currently there are some code-gen issues where the upstream backend produces more local variables and such than the older fastcomp backend, which can cause a slight slowdown in code of a few % (and for me, a bigger slowdown in Safari which does particularly well with the old compiler’s output). This is being actively worked on, and is expected to improve significantly soon.

Starting Chrome

Now you’ve got a compiler; you’ll also need a browser to run your code in. Chrome’s V8 includes support behind an experimental runtime flag; currently it’s not exposed to the user interface so you must pass it on the command line.

I recommend using Chrome Canary on Mac or Windows, or a nightly Chromium build on Linux, to make sure you’ve got any fixes that may have come in recently.

On Mac, one can start it like so:

/Applications/Google\ Chrome\ Canary.app/Contents/MacOS/Google\ Chrome\ Canary --js-flags="--experimental-wasm-simd"

If you forget the command-line flag or get it wrong, the module compilation won’t validate the SIMD instructions and will throw an exception, so you can’t run by mistake. (This is also apparently how you’re meant to test for SIMD support presence, AFAIK… compile a module and see if it works?)

Beware there are a few serious bugs in the V8 implementation, which may trip you up. In particular watch out for broken splat which can produce non-deterministic errors. For this reason I recommend disabling autovectorization for now, since you have no control of workarounds on the clang end. Non-constant bit shifts also fail to validate, requiring a scalar workaround.

Vector ops in clang

If you’re not working in C/C++ but are implementing your own Wasm compiler or hand-writing Wasm source, skip this section! ;) You’ll want to check out the SIMD proposal documentation for a list of available instructions.

First, forget anything you may have heard about emscripten including SSE compatibility headers (xmmintrin.h etc.). They’ve recently been removed as they were broken and misleading.

There’s also emscripten/vector.h, but it seems obsolete as well: it references functions from the old SIMD.js implementation that no longer exist. I recommend avoiding it for now.

The good news is, a bunch of vector stuff “just works” using standard clang syntax, and there are a few intrinsic functions for particular operations like the bitselect instruction and vector shuffling.

First, take some plain ol’ code and compile it with SIMD enabled:

emcc -o foo.html -O3 -s SIMD=1 -fno-vectorize foo.c

It’s important for now to disable autovectorization since it tends to break on the V8 splat bug for me. In the future, you’ll want to leave it on to squeeze out the occasional performance increase without manual intervention.

If you’re not using headers which predefine standard vector types for you, you can create some convenient aliases like so (these are just the types I’ve used so far):

typedef int16_t int16x8 __attribute__((vector_size(16)));
typedef uint8_t uint8x16 __attribute__((vector_size(16)));
typedef uint16_t uint16x8 __attribute__((vector_size(16)));
typedef uint32_t uint32x4 __attribute__((vector_size(16)));
typedef uint64_t uint64x2 __attribute__((vector_size(16)));

The expected float and signed/unsigned integer interpretations for 128 bits are available, and you can freely cast between them to reinterpret bit sizes.
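To make the reinterpretation concrete, here is a small sketch. It assumes little-endian lane layout (which WebAssembly uses, and which most native hosts share). `as_bytes` and `low_byte_of_lane` are hypothetical helper names, and the typedefs are repeated only so the snippet compiles on its own.

```c
#include <stdint.h>

typedef int16_t int16x8 __attribute__((vector_size(16)));
typedef uint8_t uint8x16 __attribute__((vector_size(16)));

// A cast between same-sized vector types reinterprets the 128 bits;
// it does not convert the lane values.
static inline uint8x16 as_bytes(const int16x8 v) {
    return (uint8x16)v;
}

// Hypothetical helper: read the low byte of 16-bit lane `lane` (0..7)
// through the reinterpreted byte view. Assumes little-endian layout.
static inline uint8_t low_byte_of_lane(const int16x8 v, const int lane) {
    const uint8x16 bytes = as_bytes(v);
    return bytes[lane * 2];
}
```

A value conversion (say, widening 8-bit lanes to 16-bit lanes) is a different operation and will not happen through a cast like this.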

To work around bugs in the “splat” operation that expands a scalar to a vector, I made inline helper functions for myself:

static volatile int junk = 0;

static inline int16x8 splat_const(const int16_t val) {
    // Use this only on constants due to the V8 splat bug!
    return (int16x8){
        val, val, val, val,
        val, val, val, val
    };
}

static inline int16x8 splat_vec(const int16_t val) {
    // Try to work around issues with broken splat in V8
    // by forcing the input to be something that won't be reused.
    const int guarded = val + junk;
    return (int16x8){
        guarded, guarded, guarded, guarded,
        guarded, guarded, guarded, guarded
    };
}

Once the bug is fixed, it’ll be safe to remove the ‘junk’ and ‘guarded’ bits and use a single splat helper function. Though I’m sure there’s got to be a visibly clearer way to do a splat than manually writing out all the lanes and having the compiler coalesce them into a single splat operation? o_O
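One possibly cleaner spelling, offered as a sketch only: GCC-style vector types accept a scalar operand in arithmetic and broadcast it across the lanes, so adding a scalar to a zero vector reads as a splat. What Wasm instructions this produces, and whether it dodges the V8 bug, is not verified here, so check the disassembly before relying on it. `splat_add` is a hypothetical name, and the typedef is repeated so the snippet stands alone.

```c
#include <stdint.h>

typedef int16_t int16x8 __attribute__((vector_size(16)));

// Hypothetical alternative splat: the scalar operand of a vector
// arithmetic expression is broadcast to every lane by the compiler.
static inline int16x8 splat_add(const int16_t val) {
    const int16x8 zero = {0};
    return zero + val;
}
```

The scalar has to fit the lane type without truncation, which is why the parameter is an `int16_t` rather than a plain `int`.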

The bitselect operation is also frequently necessary, especially since convenient operations like min, max, and abs aren’t available on integer vectors. You might or might not be able to do this some cleaner way without the builtin, but this seems to work:

static inline int16x8 select_vec(const int16x8 cond,
                                 const int16x8 a,
                                 const int16x8 b) {
    return (int16x8)__builtin_wasm_bitselect(a, b, cond);
}

Note that both the bitselect instruction and the builtin take the condition last. I found this maddening, so my helper function takes the condition first, where my code likes it.

You can now make your own vector abs function:

static inline int16x8 abs_vec(const int16x8 v) {
    return select_vec(v < splat_const(0), -v, v);
}

Note the < and - operators “just work” on the vectors; we only needed helper functions for the bitselect and the splat. And I’m still not confident I need those two?
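If you would rather not depend on the builtin, the same selection can be written with plain bitwise operators, because vector comparisons produce all-ones or all-zeros lanes. This is a sketch rather than a drop-in replacement: whether clang folds it into a single bitselect instruction is not verified here, and `select_bits`, `min_vec`, and `max_vec` are hypothetical names.

```c
#include <stdint.h>

typedef int16_t int16x8 __attribute__((vector_size(16)));

// Take bits from a where cond is set, and from b where it is clear.
// cond is expected to be a comparison result (each lane all ones or all zeros).
static inline int16x8 select_bits(const int16x8 cond,
                                  const int16x8 a,
                                  const int16x8 b) {
    return (a & cond) | (b & ~cond);
}

// Lane-wise minimum and maximum built on the select.
static inline int16x8 min_vec(const int16x8 a, const int16x8 b) {
    return select_bits(a < b, a, b);
}

static inline int16x8 max_vec(const int16x8 a, const int16x8 b) {
    return select_bits(a > b, a, b);
}
```

The condition stays first here too, matching the helper above.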

You’ll also likely need the vector shuffle operation, which there’s a standard builtin for. For instance here I’m deinterleaving 16-bit pixels into 8-bit pixels and the extra high bytes:

static inline uint8x16 merge_pixels(const int16x8 work) {
    return (uint8x16)__builtin_shufflevector((uint8x16)work, (uint8x16)work,
        0, 2, 4, 6, 8, 10, 12, 14, // the 8 pixels we worked on
        1, 3, 5, 7, 9, 11, 13, 15  // zeroes we don't need
    );
}
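While the V8 bugs are around, a scalar reference to compare the shuffle's output against can be handy. A minimal sketch, assuming the little-endian lane layout that Wasm uses (low byte of each 16-bit lane first); `merge_pixels_ref` is a hypothetical name, and it works on plain arrays rather than vector types.

```c
#include <stdint.h>

// Scalar reference for the byte deinterleave: the low byte of each
// 16-bit lane goes to out[0..7], the high byte to out[8..15].
static inline void merge_pixels_ref(const int16_t work[8], uint8_t out[16]) {
    for (int i = 0; i < 8; i++) {
        const uint16_t lane = (uint16_t)work[i];
        out[i] = (uint8_t)(lane & 0xff);
        out[8 + i] = (uint8_t)(lane >> 8);
    }
}
```

Feeding the same eight values through both versions and comparing the sixteen output bytes is a quick way to tell a compiler or engine bug from a mistake in your shuffle indices.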

Checking compiler output

To figure out what’s going on it helps a lot to disassemble the WebAssembly output to confirm what instructions are actually emitted — especially if you’re hoping to report a bug. Refer to the SIMD proposal for details of instructions and types used.

If you compile with -g your .wasm output will include function names, which make it much easier to read the disassembly!

Use wasm-dis like so:

wasm-dis foo.wasm > foo.wat

Load up the .wat in your code editor of choice (there are syntax highlighting plugins available for VS Code and I think Atom etc) and search for your function in the mountain of stuff.

Note in particular that bit-shift operations currently can produce big sequences of lane-shuffling and scalar bit-shifts. This is due to the LLVM compiler working around a V8 bug with bit-shifts, and will be fixed soon I hope.
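Roughly speaking, that expansion amounts to shifting each lane on its own. If you need to sidestep a runtime shift count by hand, a lane-by-lane fallback might look like the sketch below. `shift_right_lanes` is a hypothetical name, the typedef is repeated so the snippet stands alone, and it is not verified here that the compiler keeps this as scalar shifts rather than recombining them.

```c
#include <stdint.h>

typedef int16_t int16x8 __attribute__((vector_size(16)));

// Hypothetical scalar fallback: shift every lane right by a runtime count.
// Assumes 0 <= bits < 16, and relies on >> of a negative value being an
// arithmetic shift, which clang and GCC both provide.
static inline int16x8 shift_right_lanes(const int16x8 v, const int bits) {
    int16x8 out = {0};
    for (int i = 0; i < 8; i++) {
        out[i] = (int16_t)(v[i] >> bits);
    }
    return out;
}
```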

If you wish, you can modify the Wasm source in the .wat file and re-assemble it to test subtle changes — use wasm-as for this.

Reporting bugs

You will probably encounter bugs; this is very bleeding-edge stuff! The folks working on it want your feedback if you’re working in this area, so please make the most of it by providing reproducible test cases for any bugs you encounter that can’t be chalked up to the existing splat argument corruption and non-constant shift bugs.

And beware that until the splat bug is fixed, non-deterministic problems can pop up really easily.

The various trackers:

- emscripten

- LLVM/clang

- Chrome/V8:

- spec feedback: