Another part of implementing a JavaScript-like engine to run inside a WebAssembly sandbox is memory management: JS requires garbage collection because objects may hold references to each other, creating cycles that simpler schemes like reference counting can’t break.

Mark and sweep

Most JS engines implement some form of mark-and-sweep garbage collection, usually enhanced with various fancy features we won’t get into here (moving/compaction, generational sweeps, etc).

The mark phase involves tracing your way through the object graph. Starting with globals and objects that are held in variables, you mark the object, setting a flag unique to that object. For any references it holds to other objects, you repeat — whenever you reach an object that’s already been marked you can skip over to the next one without re-tracing its references.

Any object that didn’t get marked is considered unreachable, and gets deleted in the sweep phase. This requires having some way to iterate through all allocated objects, which in my experimental engine I’ve temporarily implemented as a C++ std::unordered_set (a hash map with no payload) which new objects are always added to. This should eventually be replaced with a custom allocator that knows how to iterate through its own objects, or something. :)

The stack

Traditional C/C++ programs store some of their local variables (those that aren’t optimized into registers) in a region of memory known as the stack: a pointer to the stack top is incremented and decremented as functions run to provide storage space, and the same stack is used to store return addresses for function calls (a cause of many security problems).

Some garbage collection systems like Boehm libgc take advantage of this, and scan the stack looking for object references to keep alive during the mark phase. Clever!

The WebAssembly environment has stricter memory safety: byte-addressable “linear memory” has a limited range and you can only access local variables in the stack frame through compile-time-addressed, pre-verified indexes. There’s no way to access a calling function’s stack frames to check what it had saved in its local variables…

When using the emscripten compiler, there is a second, more C/C++ like stack maintained inside the linear memory for variables and data structures that can’t fit in WebAssembly locals (for instance structure types larger than a single word, or anything that has its address taken and a pointer passed somewhere). Theoretically we could scan through that looking for our pointer values, but there may be false positives and that could be trouble — you wouldn’t want to accidentally try to traverse the object references inside a pointer that was actually a random integer or something!

Stacks, scopes, and smart pointers

For now I’ve implemented my own secondary stack which holds only encoded JS values, and modeled JS local variable bindings as C++ pointers to the value on the stack. When a garbage collection happens, the known globals and anything referenced on the stack are marked and traversed, so objects considered in use stay alive.

The way the stack is managed in the C++ engine code is inspired by V8’s Handle & HandleScope/EscapableHandleScope system. It requires a little bit of explicit discipline, and could probably be further improved in terms of the classes making usage correct, but seems to work correctly.

At the beginning of each function, a Scope or ScopeRetVal object is instantiated:

void someFunc() {

Scope scope;

// do stuff

}

This saves the stack position, and restores it to its original state when the function exits — so you don’t have to manually remember to pop. That’ll free up any of your locally allocated values for garbage collection.

Any given variable binding is either a ‘Binding’ (alias to Val*) to an existing, elsewhere-managed Val, or a ‘Local’ “smart pointer” object when compiles down to a pointer, but with a constructor that pushes a slot onto the stack.

Arguments can be passed as Local instances as well, constructed on the parent frame and eventually destructed with it, or if you know they’re GC-rooted already for sure you can pass a direct binding.

The big trick is return values, which I needed inspiration from V8 to figure out. If you try to return a Local, it’ll be cleaned up from your own scope before you return — and if you try to return a Val directly it won’t be GC-rooted and can disappear unexpectedly.

RetVal somefunc() {

ScopeRetVal scope;

// do stuff

return scope.escape(new String("keep it safe!"));

}

Functions return a RetVal which doesn’t push, instead of a Local which does. A variant of the Scope, here ScopeRetVal (V8 uses the awesomer name EscapableHandleScope!) first reserves space on the parent scope for that return value. When you return, you have the scope insert the return val into the saved slot, and then the destructor restores the stack to the saved spot _after_ the return value, safely still alive.

Without this trick, return values could end up de-allocated during code like:

var retval = a() + b();

translated to C++ as

Local retval;

*retval = *(a->call(Null(), {})) + *(b->call(Null(), {}));

where the return value from a() gets de-allocated during an allocation inside the call to b().

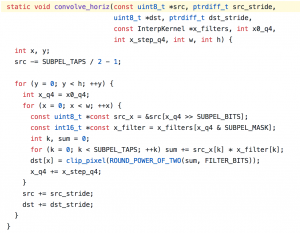

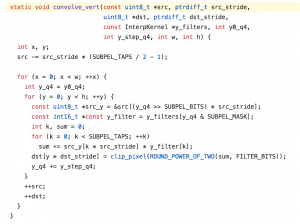

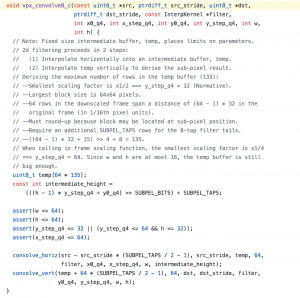

All the heavy lifting being in those two functions convolve_horiz and convolve_vert:

All the heavy lifting being in those two functions convolve_horiz and convolve_vert: