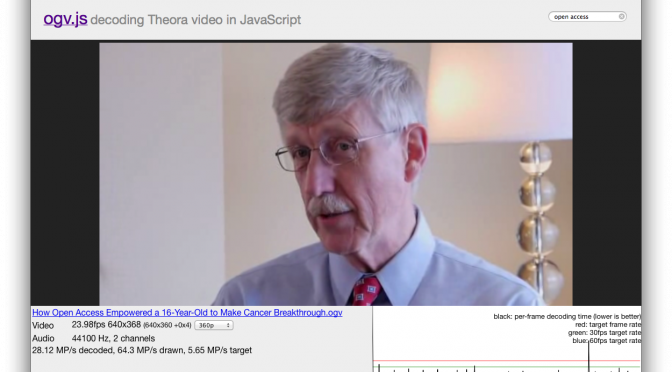

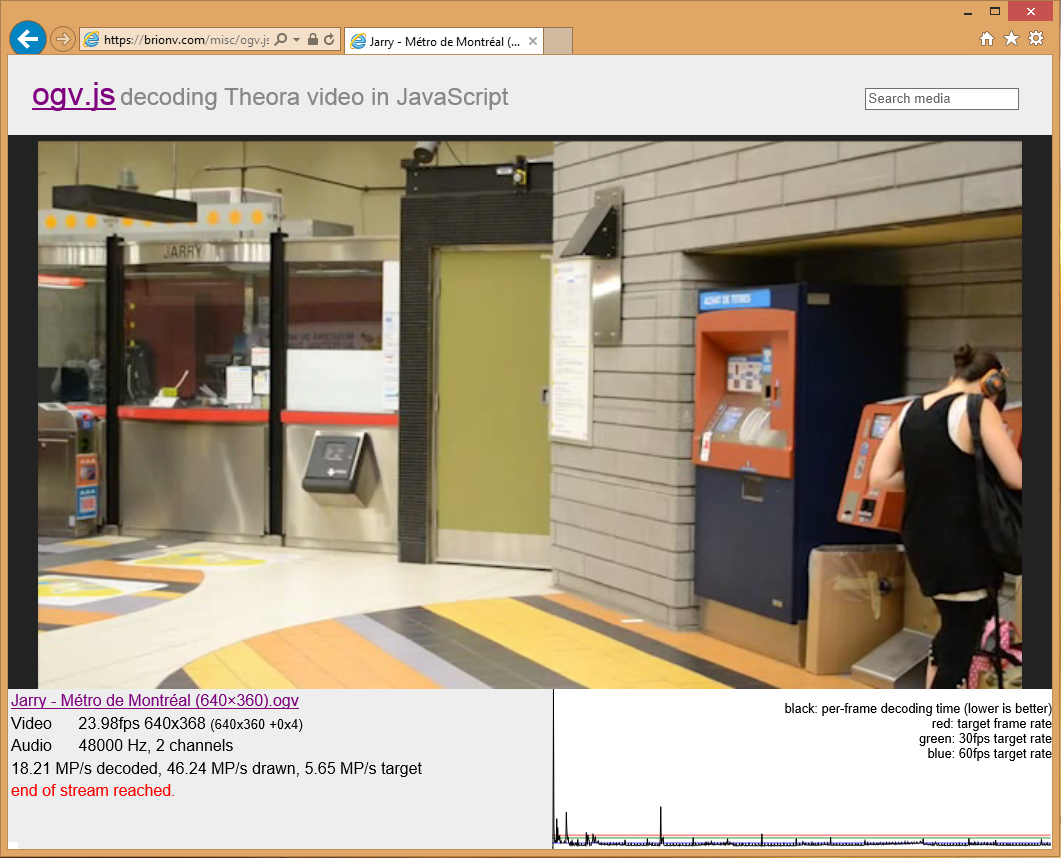

Two major updates to the ogv.js Theora/Vorbis video/audio player in the last few weekends: an all-Flash decoder for older IE versions, and WebGL and Stage3D GPU acceleration for color conversion and drawing.

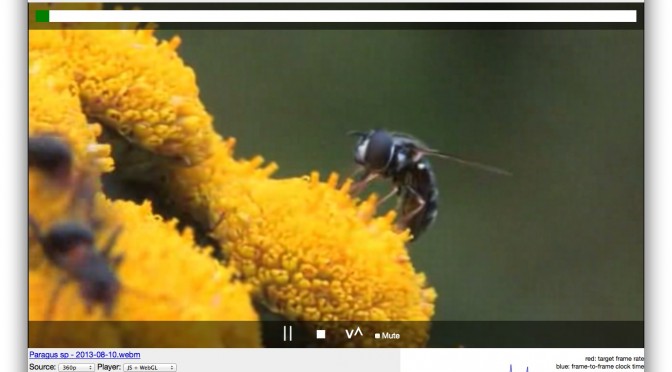

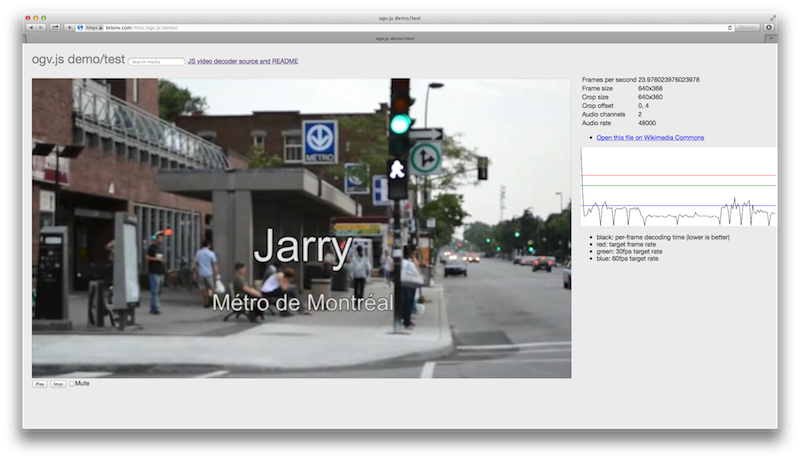

Try the demo and select ‘JS + WebGL’, ‘Flash’, or even ‘Flash + GPU’ from the drop-down! (You can also now try playback in the native video element or the old Cortado Java applet for comparison, though Cortado requires adding security exceptions if your browser works with Java at all these days. :P)

Flash / Crossbridge

The JS code output by emscripten requires some modern features like typed arrays, which aren’t available on IE 9 and older… but similar features exist in Flash’s ActionScript 3 virtual machine, and reasonably recent Flash plugins are often available on older IE installations.

The Flash target builds the core C codec libraries and the C side of the OgvJs codec wrapper using Crossbridge, the open-source version of Adobe’s FlasCC cross-compiler. This is similar in principle to the emscripten compiler used for the JavaScript target, but outputs ActionScript 3 bytecode instead of JavaScript source.

I then ported the JavaScript code for the codec interface and the player logic to ActionScript, wrapped it all up into a SWF using the Apache Flex mxmlc compiler, and wrapped the Flash player instantiation and communication logic in a JavaScript class with the same interface as the JS player.

A few interesting notes:

- The Crossbridge compiler runs much slower than emscripten does, perhaps due to JVM startup costs in some backend tool. Running the configure scripts on the libraries is painfully slowwwwwwwwww! Luckily once they’re built they don’t have to be rebuilt often.

- There are only Mac and Windows builds of Crossbridge available; it may or may not be possible to build on Linux from source. :( I’ve only tested on Mac so far.

- Flash decoder performance is roughly on par with Safari’s JS and usually a bit better than Internet Explorer’s JS.

- YCbCr to RGB conversion in ActionScript was initially about 10x slower than the JavaScript version. I mostly reduced this gap by moving the code from ActionScript to C and building it with the Crossbridge compiler… I’m not sure if something’s building with the wrong optimization settings or if the Crossbridge compiler just emits much cleaner bytecode for the same code. (And it was literally the same code — except for the function and variable declarations the AS and C code were literally identical!)

- ActionScript 3 is basically JavaScript extended with static type annotations and a class-based object system with Java-like inheritance and member visibility features. A lot of the conversion of JS code consisted only of adding type annotations or switching HTML/JS-style APIs for Flash/AS ones.

- JS’s typed array & ArrayBuffer system doesn’t quite map to the Flash interfaces. There’s a ByteArray which is kind of like a Uint8Array plus a data stream interface, with methods to read/write values of other types into the current position and advance it. There are also Vector types, which have an interface more like the traditional untyped Array but can only contain items of the given type and are more efficiently packed in memory.
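
To make the ByteArray comparison in the last item concrete, here is a rough JavaScript analog of that read/write-and-advance style, built on a typed array plus a DataView. The `ByteStream` class and its method names are hypothetical and are not Flash's API or anything from ogv.js; the big-endian byte order is an arbitrary choice for this sketch.

```javascript
// Hypothetical helper: a cursor over a byte buffer, loosely imitating the
// "read or write at the current position, then advance" style of ByteArray.
class ByteStream {
  constructor(length) {
    this.bytes = new Uint8Array(length);          // random access, like a plain typed array
    this.view = new DataView(this.bytes.buffer);  // multi-byte access at any offset
    this.position = 0;                            // current read/write cursor
  }

  writeUint8(value) {
    this.view.setUint8(this.position, value);
    this.position += 1;
  }

  writeUint32(value) {
    // false = big-endian; an arbitrary choice for this sketch.
    this.view.setUint32(this.position, value, false);
    this.position += 4;
  }

  readUint8() {
    const value = this.view.getUint8(this.position);
    this.position += 1;
    return value;
  }

  readUint32() {
    const value = this.view.getUint32(this.position, false);
    this.position += 4;
    return value;
  }
}

const stream = new ByteStream(8);
stream.writeUint8(7);
stream.writeUint32(0xdeadbeef);
stream.position = 0; // rewind, then read back in the same order
console.log(stream.readUint8(), stream.readUint32().toString(16));
```

The `bytes` member still allows indexed access, which is the part that resembles a Uint8Array; the cursor methods are the part that plain typed arrays lack.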

GPU acceleration: WebGL

WebGL is a JavaScript API based on OpenGL ES 2.0, exposing some access to GPU programming and accelerated drawing of triangles onto a canvas.

Safari unfortunately doesn’t enable WebGL by default; it can be enabled as a developer option on Mac OS X but requires a device jailbreak on iOS.

However, for IE 11 and for the general fun of it, I suspected adding GPU acceleration might make sense! YCbCr to RGB conversion and drawing bytes to the canvas with putImageData() are both expensive, especially in IE. The GPU can perform the two operations together, and more importantly can massively parallelize the colorspace conversion.
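
For a sense of the per-pixel work involved, here is a minimal sketch of a software YCbCr to RGBA conversion. It assumes 4:2:0 chroma subsampling and the common BT.601 "video range" integer coefficients; the function name `ycbcrToRgba` is hypothetical, and the actual ogv.js code may use different math or layouts.

```javascript
// Convert one 4:2:0 YCbCr frame to RGBA on the CPU.
// Assumption: BT.601 video-range coefficients in 8.8 fixed point.
function ycbcrToRgba(yPlane, cbPlane, crPlane, width, height) {
  // Uint8ClampedArray clamps out-of-range stores to 0..255 for us.
  const rgba = new Uint8ClampedArray(width * height * 4);
  // In 4:2:0 the chroma planes are half size in both directions.
  const chromaWidth = (width + 1) >> 1;
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const chromaIndex = (y >> 1) * chromaWidth + (x >> 1);
      const c = yPlane[y * width + x] - 16;
      const d = cbPlane[chromaIndex] - 128;
      const e = crPlane[chromaIndex] - 128;
      const out = (y * width + x) * 4;
      rgba[out] = (298 * c + 409 * e + 128) >> 8;               // red
      rgba[out + 1] = (298 * c - 100 * d - 208 * e + 128) >> 8; // green
      rgba[out + 2] = (298 * c + 516 * d + 128) >> 8;           // blue
      rgba[out + 3] = 255;                                      // opaque alpha
    }
  }
  return rgba;
}
```

Every output pixel depends only on its own luma sample and one chroma pair, which is why the loop body maps so naturally onto a fragment shader. In a browser, an array like this can back an ImageData object passed to putImageData().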

I snagged the fragment shader from the Broadway H.264 player project’s WebGL accelerated canvas, and with a few tutorials, a little documentation, and a lot of trial and error I got accelerated drawing working in Firefox, Chrome, and Safari with WebGL manually enabled.

Then came time to run it on IE 11… unfortunately it turns out that single-channel luminance or alpha textures aren’t supported on IE 11’s WebGL — you must upload all textures as RGB or RGBA.

Copying data from 1-byte-per-pixel arrays to a 3- or 4-byte-per-pixel array and then uploading that turned out to be horribly slow, especially as IE’s typed array ‘set’ method and copy constructor seem to be hideously slow. It was clear this was not going to work.

I devised a method of uploading the 1-byte-per-pixel arrays as pseudo-RGBA textures of 1/4 their actual width, then unpacking the subpixels from the channels in the fragment shader.

The unpacking is done by adding two more textures: “stripe” textures at the luma and chroma resolutions, which for each pixel have a 100% brightness in the channel for the matching packed subpixel. For each output pixel, we sample both the packed Y, Cb, or Cr texture and the matching-size stripe texture, then multiply the vectors and sum the components to fold down only the relevant channel into a scalar value.

It feels kinda icky, but it seems to run just as fast, at least on my good hardware, and works in IE 11 as well as the other browsers.
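
The channel-packing trick described above can be emulated on the CPU to check the arithmetic. This is only a sketch: the function names are hypothetical, the plane width is assumed to be a multiple of 4, and nearest-neighbor sampling is modeled by plain array indexing. In a real fragment shader the multiply-and-sum step would amount to a dot product of the two sampled vectors.

```javascript
// Build a "stripe" texture: one RGBA texel per output pixel, with full
// brightness only in the channel (x % 4) that holds that pixel's packed value.
function buildStripeTexture(width, height) {
  const stripe = new Uint8Array(width * height * 4);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      stripe[(y * width + x) * 4 + (x % 4)] = 255;
    }
  }
  return stripe;
}

// CPU stand-in for the shader: treat the 1-byte-per-pixel plane as an RGBA
// texture of width / 4 texels, sample it and the stripe texture, multiply
// the channels pairwise and sum them.
function samplePacked(plane, stripe, width, x, y) {
  const packedWidth = width / 4;                  // texels per row once packed
  const texel = (y * packedWidth + (x >> 2)) * 4; // first byte of the RGBA texel
  const mask = (y * width + x) * 4;               // matching stripe texel
  let sum = 0;
  for (let channel = 0; channel < 4; channel++) {
    // Normalize to 0..1 as a GPU sampler would, then multiply and accumulate.
    sum += (plane[texel + channel] / 255) * (stripe[mask + channel] / 255);
  }
  return Math.round(sum * 255);
}

// Usage: every unpacked value should equal the original byte.
const demoPlane = Uint8Array.from({ length: 16 }, (_, i) => i * 10);
const demoStripe = buildStripeTexture(8, 2);
console.log(samplePacked(demoPlane, demoStripe, 8, 5, 1) === demoPlane[1 * 8 + 5]);
```

Note that the packed "texture" is the very same byte array as the original plane, just reinterpreted, so no copy is needed before upload. That is the point of the trick.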

On Firefox with asm.js-optimized Theora decoding and WebGL-optimized drawing, I can actually watch 720p and some 1080p videos at full speed. Nice! (Of course, Firefox can already use its native decoder which is faster still…)

A few gotchas with WebGL:

- There’s a lot of boilerplate you have to do to get anything running; spend lots of time reading those tutorials that start with a 2d triangle or a rectangle until it makes sense!

- Once you’ve used a canvas element for 2d rendering, you can’t switch it to a WebGL canvas. It’ll just fail silently…

- Creating new textures is a lot slower than uploading new data to an existing texture of the same size.

- Error checking can be expensive because it halts the GPU pipeline; this significantly slows down the rendering in Chrome. Turn it off once code is working…
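
The texture-reuse point can be sketched as a small cache that allocates a texture once per size and afterwards only uploads new data into it. This is one possible approach, not necessarily what ogv.js does: `createTextureCache` and its `upload` function are hypothetical names, `gl` is assumed to be a WebGL 1 rendering context, and the dimensions are given in RGBA texels.

```javascript
// Hypothetical helper: one texture per name, re-allocated only when the
// requested size changes. `gl` is assumed to be a WebGLRenderingContext.
function createTextureCache(gl) {
  const entries = {};
  return function upload(name, width, height, pixels) {
    let entry = entries[name];
    if (!entry || entry.width !== width || entry.height !== height) {
      // Slow path: first use or size change, so allocate fresh storage.
      if (entry) gl.deleteTexture(entry.texture);
      entry = { texture: gl.createTexture(), width: width, height: height };
      entries[name] = entry;
      gl.bindTexture(gl.TEXTURE_2D, entry.texture);
      gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
      gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);
      gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.NEAREST);
      gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.NEAREST);
      gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, width, height, 0,
                    gl.RGBA, gl.UNSIGNED_BYTE, pixels);
    } else {
      // Fast path: same size as before, so overwrite the existing storage.
      gl.bindTexture(gl.TEXTURE_2D, entry.texture);
      gl.texSubImage2D(gl.TEXTURE_2D, 0, 0, 0, width, height,
                       gl.RGBA, gl.UNSIGNED_BYTE, pixels);
    }
    return entry.texture;
  };
}
```

In a browser, `gl` would come from a fresh canvas via `canvas.getContext('webgl') || canvas.getContext('experimental-webgl')`. Checking that result for null catches the case where the canvas was already used for 2d rendering.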

GPU acceleration: Flash / Stage3D

Once I had things working so nicely with WebGL, the Flash version started to feel left out — couldn’t it get some GPU love too?

Well luckily, Flash has an OpenGL ES-like API as well: Stage3D.

Unluckily, Stage3D and WebGL are gratuitously different in API signatures. Meh, I can work with that.

Really unluckily, Stage3D doesn’t include a runtime shader compiler.

You’re apparently expected to write shaders in a low-level assembly language, AGAL… and manually grab an “AGALMiniAssembler” class and stick it in your code to compile that into AGAL bytecode. What?

Luckily there’s also a glsl2agal converter, so I was able to avoid rewriting the shaders from the WebGL version in AGAL manually. Yay! This required some additional manual hoop-jumping to make sure variables mapped to the right registers, but the glsl2agal compiler makes the mappings available in its output so that wasn’t too bad.

Some gotchas with Stage3D:

- The AS3 documentation pages for Stage3D classes don’t show all the class members in Firefox. No, really, I had to read those pages in Chrome. WTF?

- Texture dimensions must be powers of 2, so I have to copy rows of bytes from the Crossbridge C heap into a temporary array of the right size before uploading to the GPU. Luckily copying byte arrays is much faster in Flash than in IE’s JS!

- As with the 2d BitmapData interface, Flash prefers BGR over RGB. Just had to flip the order of bytes in the stripe texture generation.

- Certain classes of errors will crash Flash and IE together. Nice!

- The glsl2agal compiler forced my texture sampling to linear interpolation with wrapping; I had to do a string replace on the generated AGAL source to set it to nearest neighbor & clamped.

- There doesn’t appear to be a simple way to fix the Stage3D backing buffer size and scale it up to fit the window, at least in the modes I’m using it. I’m instead handling resizing by setting the backing buffer to the size of the stage, and just letting the texture render larger. This unfortunately uses nearest-neighbor for the scaling because I had to disable linear sampling to do the channel-packing trick.

- On my old Atom-based tablet, Stage3D drawing is actually slower than software conversion and rendering. D’oh! Other machines seem faster, and about on par with WebGL.
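
The power-of-2 padding step from the list above is simple to sketch. The real code is ActionScript working on the Crossbridge heap; the version below is a JavaScript illustration with hypothetical names, and it assumes the source rows are tightly packed with no extra stride.

```javascript
// Smallest power of two that is >= n (for n >= 1).
function nextPowerOfTwo(n) {
  let p = 1;
  while (p < n) p *= 2;
  return p;
}

// Copy a tightly packed plane, row by row, into a zero-filled buffer whose
// row length and row count are both powers of two.
function padToPowerOfTwo(src, width, height) {
  const paddedWidth = nextPowerOfTwo(width);
  const paddedHeight = nextPowerOfTwo(height);
  const data = new Uint8Array(paddedWidth * paddedHeight);
  for (let y = 0; y < height; y++) {
    // Each source row lands at the start of the wider destination row.
    data.set(src.subarray(y * width, (y + 1) * width), y * paddedWidth);
  }
  return { data: data, width: paddedWidth, height: paddedHeight };
}

// Usage: a 3x2 plane becomes 4x2, with one padding byte per row.
const padded = padToPowerOfTwo(new Uint8Array([1, 2, 3, 4, 5, 6]), 3, 2);
console.log(padded.width, padded.height, Array.from(padded.data));
```

With a padded texture, the texture coordinates presumably need scaling by the ratio of real to padded size so that the padding never reaches the screen.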

I’ll do a little more performance tweaking, but it’s starting to look like it’s time to clean it up and try integrating with our media playback tools on MediaWiki… Wheeeee!